Boring Semantic Layer + MCP = 🔥

Ju Data Engineering Weekly - Ep 88

Bonjour!

I'm Julien, freelance data engineer based in Geneva 🇨🇭.

Every week, I research and share ideas about the data engineering craft.

Not subscribed yet?

Last week we launched the boring semantic layer.

The Boring Semantic Layer (BSL) is a lightweight semantic layer built on top of Ibis.

I love ❤️ it because:

It’s super light: pip install boring-semantic-layer

It’s Ibis-powered — meaning it works with almost any engine out of the box

Today, I want to explore into how this connects with the MCP integration I mentioned in the launch post.

I started exploring the semantic layer space while experimenting with LLM interfaces for data.

If you take a brute-force approach and let an LLM query your raw tables directly, you’ll quickly run into hallucinations — things like:

Wrong joins

Bad aggregations

The semantic layer serves as an interface that defines pre-built aggregations and validated relationships — helping prevent those kinds of issues.

Take the flights example: we have two tables, flights and carriers.

Rather than exposing those raw tables to the LLM, we expose ready-to-query aggregations, such as:

In this setup, the LLM can only aggregate the number of flights per origin and destination — nothing more.

That constraint is a feature, not a bug: it allows the LLM to focus on intent, not SQL correctness.

You’re trading SQL flexibility for reliability.

MCP

MCP is a protocol designed to standardize how LLMs interact with external tools.

Each MCP exposes a set of so-called “tools” — think of them as API endpoints with predefined inputs and outputs.

When a user asks a question, LLMs are now trained to browse the available MCPs and identify the one they can use to handle the request.

Python MCP

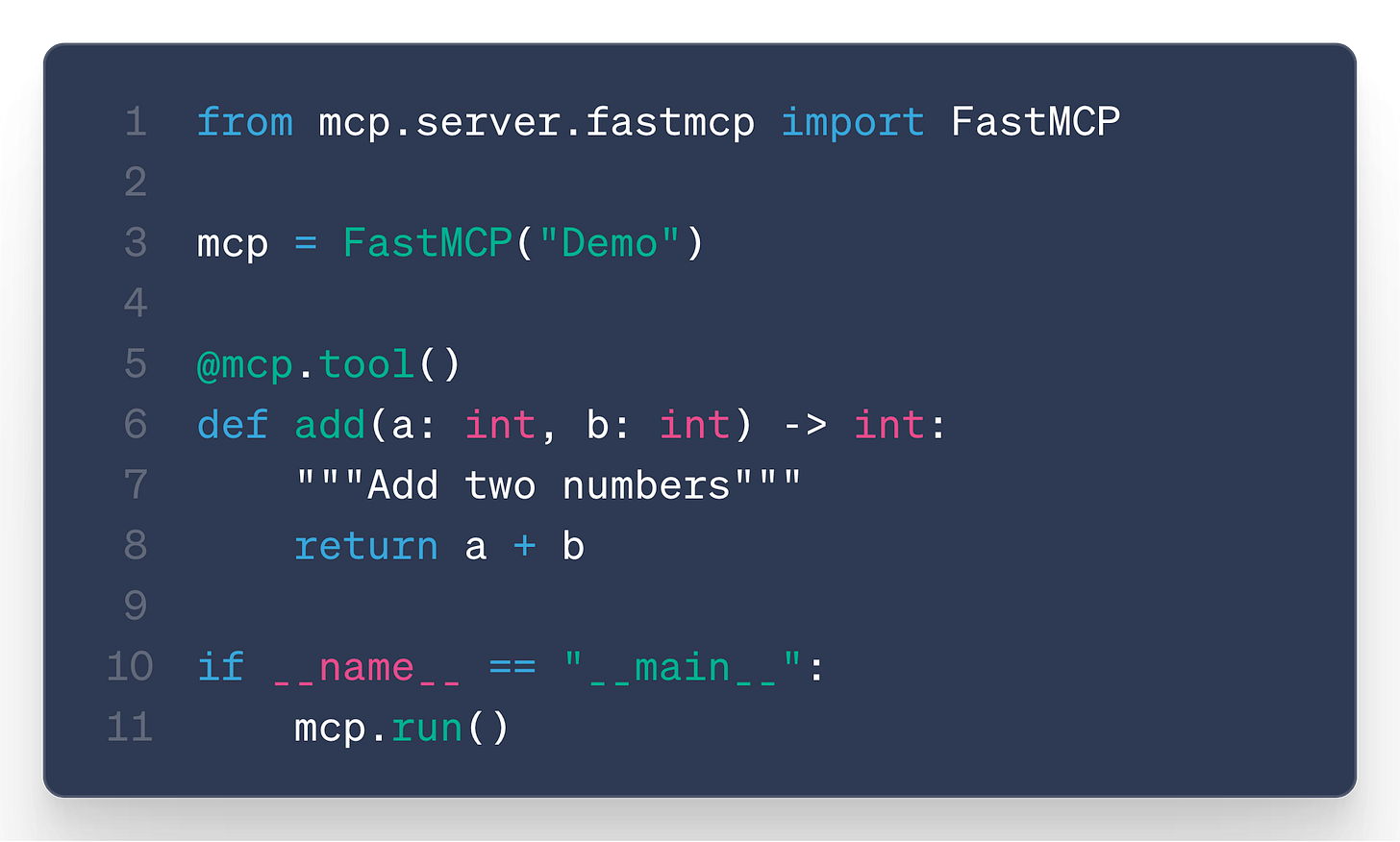

Anthropic has released a Python SDK that implements the MCP protocol.

It allows you to build an MCP server that can be exposed to a client.

A client, in this context, could be Cursor, Claude Desktop, Claude Code, etc.

Using the @tool decorator, you can easily add tools to the MCP server.

When an LLM scans the MCP, it will see each tool along with its docstring documentation.

The docstring is crucial — it helps the LLM understand what the tool does and how to structure the input correctly.

Semantic Layer + MCP

In the context of our semantic layer, MCP acts as a bridge between the LLM and the Boring Semantic Layer.

Here’s how it works:

The user asks a question to the LLM.

The LLM scans the available tools and calls the appropriate MCP tool with a corresponding query.

The MCP forwards the query to the Boring Semantic Layer, which translates it into SQL.

The results flow back to the LLM, which then formats the response in natural language.

BSM: SemanticMCP

To enable a smooth integration between the Boring Semantic Layer (BSL) and an MCP, we introduced a new class this week: MCPSemanticModel.

It inherits from the original FastMCP class by Anthropic and extends it with a set of built-in tools that the LLM can use to query your data.

To use it, simply pass your semantic model as input to the MCPSemanticModel class.

Run this file, add the MCP to your client and that’s it !

The client’s LLM will be able to access your semantic layer.

(Check the demo section below if you want to try it yourself.)

The MCP server comes with a set of pre-implemented tools:

list_models – Returns the list of available semantic models.

get_model – Fetches the definition of a specific semantic model.

get_time_range – Retrieves the time range covered by a model’s data.

query_model – Executes a query against a semantic model.

Each tool includes a proper docstring, which acts as a prompt for the LLM.

This prompt is especially important for tools like query_model.

In the prompt, we explain to the LLM how to format its queries: the JSON format for filtering, the available time ranges, and more.

The beauty of this setup is its flexibility.

The MCPSemanticModel is just an instance of FastMCP.

You can enhance the MCPSemanticModel class as you wish: add new tools, add prompts, add resources, and more.

Demo

There is a ready-to-use MCP implementation in the repo here.

Just add this config to your Claude Deskop MCP config file:

Nothing to install (except uv) !

{

"mcpServers": {

"flight_sm": {

"command": "uv",

"args": [

"run",

"https://raw.githubusercontent.com/boringdata/boring-semantic-layer/refs/heads/main/examples/example_mcp.py"

]

}

}

}That’s it! Open your Claude Desktop, and you can start chatting with the flight data.

Let’s start by asking a simple question:

“top 10 flights destinations”

If you look at the MCP call, the LLM goes through the following steps:

Lists all the available models (datasets)

Retrieves more details about the flights model

Performs a query

Now, let’s add a time dimension to the prompt:

“Analyze the quarterly flight number evolution in Boston.”

In this case, the model also uses get_time_range to verify the available date range in the dataset before running the query.

And then leverage the time_grain in the query() to aggregate data correctly.

Claude even generated a nice dashboard for us:

From the tests we ran, the LLM generally understands the prompts we provide about how to query the data model.

It does make occasional mistakes — for example: “Error executing tool query_model: Operator ‘in’ requires ‘values’ field.”

But what’s impressive is that it learns from the error message and often manages to correct itself in the next attempt.

Next Step

You got it — the LLM is only as good as your semantic model.

If a measure isn’t exposed, the LLM won’t be able to retrieve the information.

In that sense, building the semantic model is becoming the new bottleneck in the analytics process.

But wait…

Could the LLM help us build the semantic model itself?

Does it have to be an ad-hoc process?

What if we could build it incrementally, as users ask questions?

That’s exactly what we’ll explore with Benoit Pimpaud in the next edition of the newsletter.

Stay tuned!

We’d love to hear your feedback and welcome contributions — and don’t forget to ⭐ the project!

Thanks for reading,

-Ju

The best breakdown of semantic layer and MCP interaction I’ve seen to date. I’ll be spending some time with this.

I have also been working with Rill’s MCP server and liking it. However its semantic layer is baked into the tool (a point you brought up when building the boring semantic layer).

Do you have any concerns about Ibis becoming stale as a library? Most of the folks maintaining it at Voltron Data have moved on and wondering if it has a PMC.

Very cool, thanks for sharing