Today’s post is not typical for this newsletter.

I'm excited to announce the launch of a project I've been working on for the past few months (and, in some ways, the past decade): Boring Data.

Boring Data is a set of Terraform templates designed to help you kickstart your data stack quickly.

It distills my decade of data engineering experience into a single repo.

In this post, I’d like to briefly explain what led me to build this product and what it includes.

Problem: Building a stack is risky

After building several data platforms for startups and corporates, I've noticed the same problems coming up again and again:

So many moving pieces: how do you plan efficiently?

Data stack projects are challenging because many complex pieces must be executed correctly.

This creates uncertainty around the time, expenses, and headcount required for implementation.

Such uncertainty makes these projects particularly risky, especially since the ROI is often unclear at the outset.

Which tools to use?

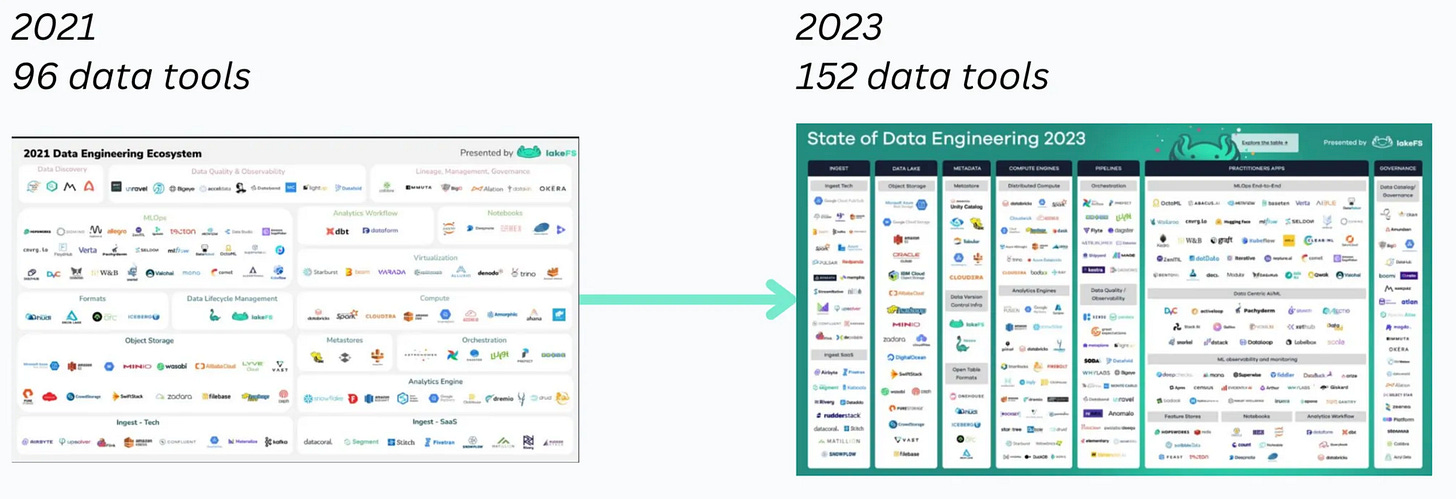

The data ecosystem evolves super fast:

How do you choose the right tool without getting trapped in endless proofs of concept or, worse, having to migrate again in six months?

Staff diversion: how to find time?

Building a stack requires developing internal knowledge and skills.

But where can you find the time when your team is busy with daily operations?

Solution: Data Stack Onboarding Package

After months of reflection, here's the solution I've developed. It combines templates with workshops to upskill your team:

TL;DR:

Buy expertise in one click with Boring Data templates.

Build internal skills with Boring Data workshops.

Why Templates?

Building a data stack is like setting the right trajectory for an airplane.

A solid design from the start reduces long-term maintenance costs and avoids complete migrations later.

This makes building a stack largely a one-shot problem, and I'm convinced templates are the perfect fit for it:

• Get started fast – Skip months of trial and error.

• Pay once, own it forever – No SaaS lock-in.

• Full control – Deploy and customize the code however you want.

Our templates put your stack on the right trajectory from the start.

Why Workshops?

I understand that Terraform, AWS, and Iceberg can feel daunting.

That's why I offer workshops alongside the templates.

These sessions help you understand the craftsmanship behind the templates and equip you to manage your platform independently.

Who is this for?

Boring Data is designed primarily for:

• Data teams launching or migrating their stack (e.g., moving to Iceberg)

• Data freelancers looking to speed up projects

• Learners who want hands-on experience with a production-ready setup

Template Library

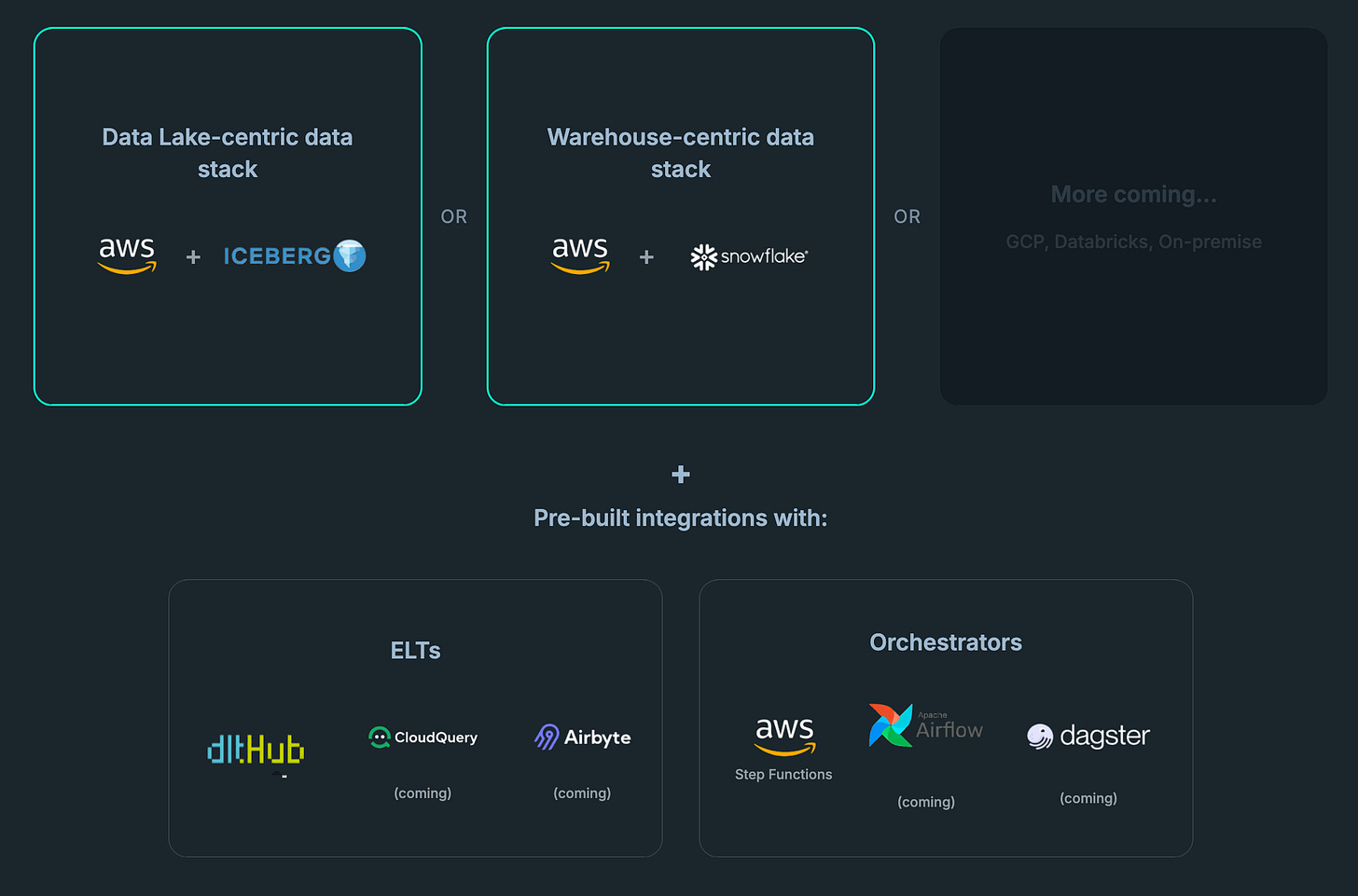

Our templates have a simple structure:

• A backbone built for a specific platform (cloud provider or cloud warehouse)

• A CLI that generates code and allows you to integrate ELT tools, orchestrators, and transformation frameworks with just one command

1 template = 1 backbone + integrations with tools

Currently, our library contains two templates:

Data warehouse-centric: AWS + Snowflake

+ an example pipeline combining dlt (ELT), dbt (transformation), and AWS Step Functions (orchestration)

Data lake-centric: AWS + Iceberg

+ an example pipeline combining dlt (ELT), dbt on Athena (transformation), and AWS Step Functions (orchestration)

Both templates come with a GitHub CI workflow.

See it in action:

Pricing

💲 Launch price: $1,500 per template (building one from scratch would take at least a month)

📆 Includes one year of updates

⚡ Only 25 licenses available at this price

We recognize that Boring Data is a new way of working and is not yet common in the data world.

That's why we offer a 7-day money-back guarantee if you're not satisfied.

Workshop pricing is available upon request, as costs vary depending on the modules needed.

Next Steps

Our roadmap is packed with exciting additions:

More template backbones: GCP, Databricks, etc.

More integrations: additional ELT tools (Airbyte, CloudQuery), SQLMesh, Airflow, Dagster, and more.

And, of course... AI

In my experience, AI struggles to write more than about 20 lines of code at a time: the context grows too large, and LLMs need strong constraints to avoid hallucinating.

Our templates and code-generating CLI are perfect for constraining an AI agent.

We believe the future of data engineering will be:

80% template/code generation

10% AI agent

10% human

If you want to know more or have any questions, you can reply to this email directly.

If you'd like to show your support, I'd greatly appreciate it if you could engage with the announcement post on LinkedIn.

Thank you so much for your support!

Happy (boring 😎) data stack building.

Ju