Companies want to use data everywhere: marketing, sales, operations.

However, skills, toolsets, and needs vary widely among consumers.

Some prefer simple drag-and-drop dashboards, others require easy data transformation capabilities, and the most technical users want access to raw data.

How can we address this broad range of requirements while maintaining scalability when building a data platform?

Federico and I discussed this topic a few weeks ago and realized we had run into the same challenge in our projects.

We decided to collaborate on this post to share our experiences and propose potential solutions to this problem.

Modeling data for various use cases

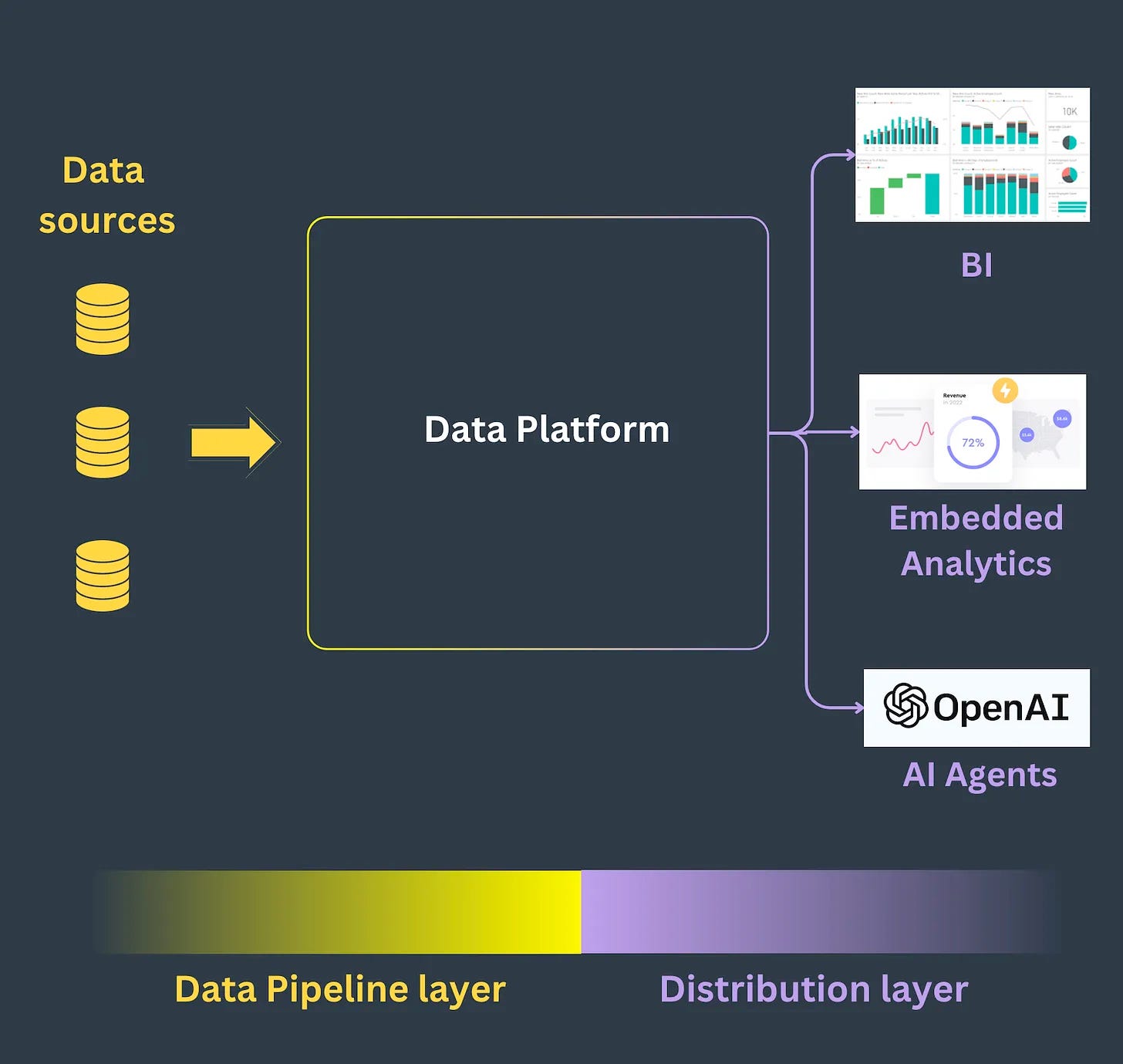

The distribution layer of a stack is quite challenging: from BI dashboards to embedded analytics and, recently, AI agents, data needs to be distributed for many use cases.

These use cases differ in several ways:

They have different latency requirements (as addressed in a previous post).

They require different modeling approaches.

They support consumers with different skill sets.

Let's consider an example of an e-commerce company with the following user profiles:

Clara, the company's CEO, requires her figures to be updated every Monday at 8 am. She can build her dashboard in Tableau but needs help writing SQL and does not have time for data manipulation.

Jim, a Data Analyst for the marketing department, creates marketing campaigns using SQL directly in Snowflake. He works in his sandbox using dbt.

John, a Data Scientist, creates recommendation models. He usually works with unaggregated data to prepare his dataset. He needs to access many tables to run exploratory analyses and check possible correlations between dimensions.

All these users use the same data but via different tools, from different perspectives, and at various aggregation levels.

How is it usually done?

One way to address this challenge is by denormalizing the data and building one big table (OBT) containing all dimensions at the lowest aggregation level.

Once done, it’s possible to build specific models for each distribution use case:

For Clara: create a view that shows metrics like the number of sales per day for the last quarter.

For Jim: create another view that aggregates sales per customer segment.

For John: provide access to the raw table directly.
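To make this concrete, here is a minimal sketch of the pattern using Python's built-in sqlite3 module. The table name `obt_sales`, its columns, the view names, and the sample rows are all hypothetical and only serve the illustration. Clara's "last quarter" filter is left out to keep the example deterministic.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Hypothetical OBT: one row per sale, with every dimension denormalized.
CREATE TABLE obt_sales (
    order_id INTEGER,
    order_date TEXT,
    customer_id INTEGER,
    customer_segment TEXT,
    product TEXT,
    amount REAL
);
INSERT INTO obt_sales VALUES
    (1, '2024-01-08', 10, 'new',       'shoes', 80.0),
    (2, '2024-01-08', 11, 'returning', 'socks', 10.0),
    (3, '2024-01-09', 10, 'new',       'socks', 12.0),
    (4, '2024-01-09', 12, 'returning', 'shoes', 95.0);

-- Clara: daily sales figures for her dashboard.
CREATE VIEW sales_per_day AS
SELECT order_date, COUNT(*) AS nb_sales, SUM(amount) AS revenue
FROM obt_sales
GROUP BY order_date;

-- Jim: sales aggregated per customer segment.
CREATE VIEW sales_per_segment AS
SELECT customer_segment, COUNT(*) AS nb_sales, SUM(amount) AS revenue
FROM obt_sales
GROUP BY customer_segment;
""")

sales_per_day = conn.execute(
    "SELECT * FROM sales_per_day ORDER BY order_date"
).fetchall()
sales_per_segment = conn.execute(
    "SELECT * FROM sales_per_segment ORDER BY customer_segment"
).fetchall()
# John: reads the unaggregated table directly.
raw_rows = conn.execute("SELECT * FROM obt_sales").fetchall()

print(sales_per_day)
print(sales_per_segment)
print(len(raw_rows), "raw rows")
```

Each consumer gets their own model on top of the same table, which is exactly what makes the setup easy to start with.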

This approach works very well for small-scale platforms: the data platform team owns the models, maintains control over them, and updates them as requirements evolve.

However, what typically occurs as the platform expands? What happens when more users come on board with additional use cases?

Limitations of this approach

The previous section should give a clear enough idea of how intricate modeling data for different use cases can be.

When an organization expands, it’s easy to see that data democratization can get out of hand quickly, leading to a sprawl of models that would make the most formidable analytics engineers recoil in terror.

You'd be way off track if you thought the above picture was a snapshot of our local star cluster.

Instead, it is a 3D representation of a fraction of GitLab’s dbt DAG. The complete picture was too large to include, but you can find the original here.

To add fuel to the fire, this talk from dbt’s CEO Tristan Handy shows that many dbt projects have over 1000 models, with 5% of them having over 5000!

The situation is similar to when a company adopts any BI solution. It starts with a few general-purpose dashboards, but everyone and their dog have a slightly different opinion on how data should be represented.

Soon, they are drowning in a swamp of unnecessary dashboards and reports no one wants to take responsibility for. Sound familiar?

Unfortunately, there is a tradeoff between flexibility and business meaning in the world of data.

The more normalized your data is, the more flexibility you gain, but the less business meaning it carries on its own.

The opposite is also true: the more you specialize your data (via denormalization and aggregations), the more meaning it gains and the more flexibility it loses. So, to answer two slightly different questions, you need to create two charts, two models, two reports, two dashboards… Without proper guardrails, it becomes easy to end up with an unreasonable number of data products.

Is swimming in a sea of “One Big Tables” our unavoidable destiny, or is there a better way?

The case for the semantic layer

The simplest definition of “semantics” is: “the study of meanings”.

Meanings are fundamental to collaboration: the moment a concept becomes ambiguous or loses meaning, problems arise.

We are getting a bit philosophical here, but bear with us.

Think about the concept of “customer”. Easy to define, right? A customer is an individual who purchased something. Ok.

When?

Is a person who bought something one year ago still a customer? What if they subscribed to your service for two years and canceled just last month? What are they? Maybe they went on vacation for a month and will renew the subscription when they return home. Do you know?
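A small sketch shows how quickly two reasonable definitions diverge. Everything here is hypothetical: the customer records, the 90-day window, and the fixed reference date are assumptions chosen for the illustration.

```python
from datetime import date

# Hypothetical records: (customer_id, last_purchase, subscription_end).
# subscription_end is None when the subscription is still running.
customers = [
    (1, date(2024, 5, 20), None),
    (2, date(2023, 4, 2), None),               # bought a year ago, still subscribed
    (3, date(2024, 5, 1), date(2024, 5, 15)),  # bought recently, canceled last month
    (4, date(2022, 1, 10), date(2022, 6, 30)),
]

today = date(2024, 6, 1)  # fixed reference date so the result is reproducible


def purchased_within(days):
    """Definition A: a customer is anyone who purchased in the last `days` days."""
    return {
        cid
        for cid, last_purchase, _ in customers
        if (today - last_purchase).days <= days
    }


def currently_subscribed():
    """Definition B: a customer is anyone whose subscription has not ended."""
    return {
        cid
        for cid, _, sub_end in customers
        if sub_end is None or sub_end >= today
    }


by_recent_purchase = purchased_within(90)
by_subscription = currently_subscribed()

print("Definition A:", sorted(by_recent_purchase))
print("Definition B:", sorted(by_subscription))
print("Disagreement:", sorted(by_recent_purchase ^ by_subscription))
```

Both definitions are defensible, yet they disagree on half of the records. Two teams reporting a "number of customers" from the same data would publish different figures.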

As you can see, getting lost in a sea of definitions and interpretations is very easy. This is where you stop and say, “OK guys, the situation is getting out of hand. We need a centralized approach.” That is what the semantic layer provides.

When implementing a semantic layer, you agree with the other stakeholders about the definition and meaning of things. With a centralized source of truth, the two immediate gains are:

No more guesswork and ambiguity

A heavy reduction in model sprawl
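As a rough illustration of the idea, the sketch below keeps metric and dimension definitions in a single place and compiles requests into SQL on demand. This is a toy example, not how Cube or the dbt Semantic Layer are implemented. The `METRICS` and `DIMENSIONS` dictionaries, the `build_query` helper, and the `obt_sales` table are all hypothetical names.

```python
import sqlite3

# One agreed-upon place for definitions (hypothetical names and formulas).
METRICS = {
    "revenue": "SUM(amount)",
    "nb_sales": "COUNT(*)",
}
DIMENSIONS = {"order_date", "customer_segment"}


def build_query(metric, dimension, table="obt_sales"):
    """Compile a (metric, dimension) request into SQL from the shared definitions."""
    if metric not in METRICS:
        raise KeyError(f"unknown metric: {metric}")
    if dimension not in DIMENSIONS:
        raise KeyError(f"unknown dimension: {dimension}")
    return (
        f"SELECT {dimension}, {METRICS[metric]} AS {metric} "
        f"FROM {table} GROUP BY {dimension} ORDER BY {dimension}"
    )


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE obt_sales (order_date TEXT, customer_segment TEXT, amount REAL);
INSERT INTO obt_sales VALUES
    ('2024-01-08', 'new', 80.0),
    ('2024-01-08', 'returning', 10.0),
    ('2024-01-09', 'new', 12.0);
""")

# Two different questions, answered without creating two new models.
revenue_by_day = conn.execute(build_query("revenue", "order_date")).fetchall()
sales_by_segment = conn.execute(
    build_query("nb_sales", "customer_segment")
).fetchall()

print(revenue_by_day)
print(sales_by_segment)
```

The point of the design is that "revenue" is defined once. Any consumer asking for it, by any dimension, gets the same formula instead of a new hand-written view.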

Now, “semantic layer” might seem like another buzzword created ad hoc to stir FOMO and make you buy tools you don’t need, but in reality, it’s an old concept, dating back to 1991 when Business Objects (later acquired by SAP) patented it.

Nowadays, several players are in this game. One of the early movers is Cube, our favorite, as one can plug it into nearly any data stack. Check out this video for a quick introduction to how Cube works.

dbt Labs themselves launched the first version of their semantic layer in 2022, available to anybody with a dbt Cloud subscription. In 2024, they released a new version and made it generally available.

Google Trends shows a growing interest in this concept since 2022.

It’s evident that the semantic layer is becoming crucial in the Modern Data Stack, and it may well become a staple as more businesses look to bring clarity to their data and minimize ambiguity.

Thanks for reading, and grazie mille Federico for this very nice collab!

-Ju

I would be grateful if you could help me improve this newsletter. Don’t hesitate to share what you liked or disliked and the topics you would like me to tackle.

P.S. You can reply to this email; it will get to me.

Not sure if you've seen this yet but I really like this take (from creator of Airflow + Superset) that encourages moving the semantic layer more upstream into the modeling layer: https://preset.io/blog/introducing-entity-centric-data-modeling-for-analytics/

I can't imagine that these 5000 model dbt projects are optimal!