Bonjour!

I'm Julien, a freelance data engineer based in Geneva 🇨🇭.

Every week, I research and share ideas about the data engineering craft.


Paul and I met at the end of last year and chatted about our shared interest in data apps and the declarative data stack.

Paul is building an exciting open-source project called Big Functions.

He offered to support one of my posts, and I gladly accepted, as I've been eager to explore this “Warehouse on steroids” topic for some time.

The days of simply dumping data into a warehouse and layering dashboards on top are slowly ending.

Data analysts aren’t just reporting anymore—they want to drive action.

That’s why OLAP systems are evolving from passive storage to action-driven platforms.

Now, analysts want to use their SQL models to:

• Trigger workflows

• Automate decisions

• Interact with external systems

Let’s explore how to give our data stacks this superpower.

DuckDB

Let’s start with DuckDB.

As you may already know, DuckDB can be enhanced with extensions.

Extensions can either be:

• Packaged into DuckDB Core

• Installed manually, like this:

```sql
INSTALL spatial;
LOAD spatial;
```

While extensions are a natural way to add new functionality to a core system, building one for DuckDB means writing C++ code…

But what if one extension could connect DuckDB to any system?

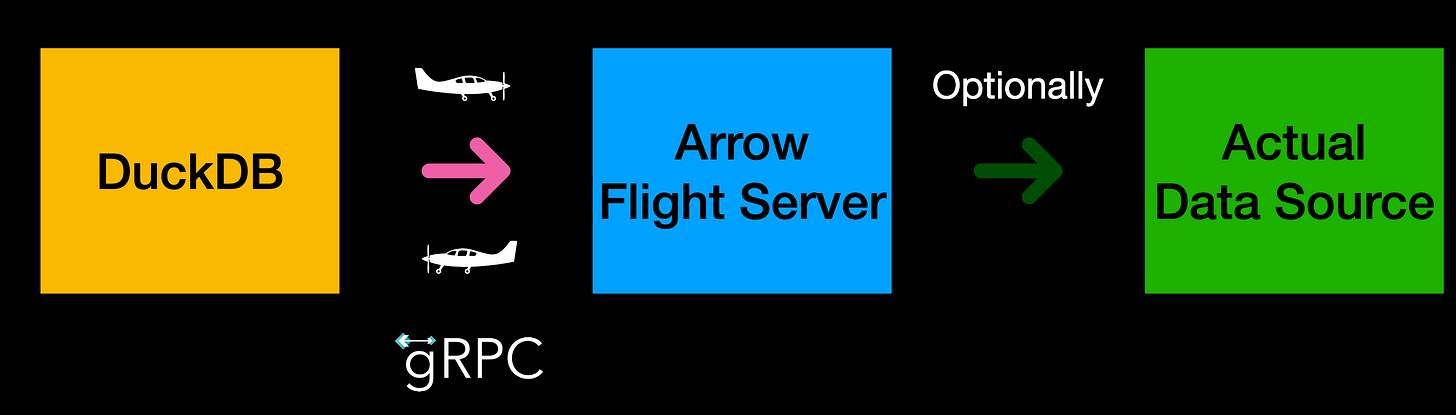

At the last DuckCon, Rusty Conover presented the duckdb-airport extension.

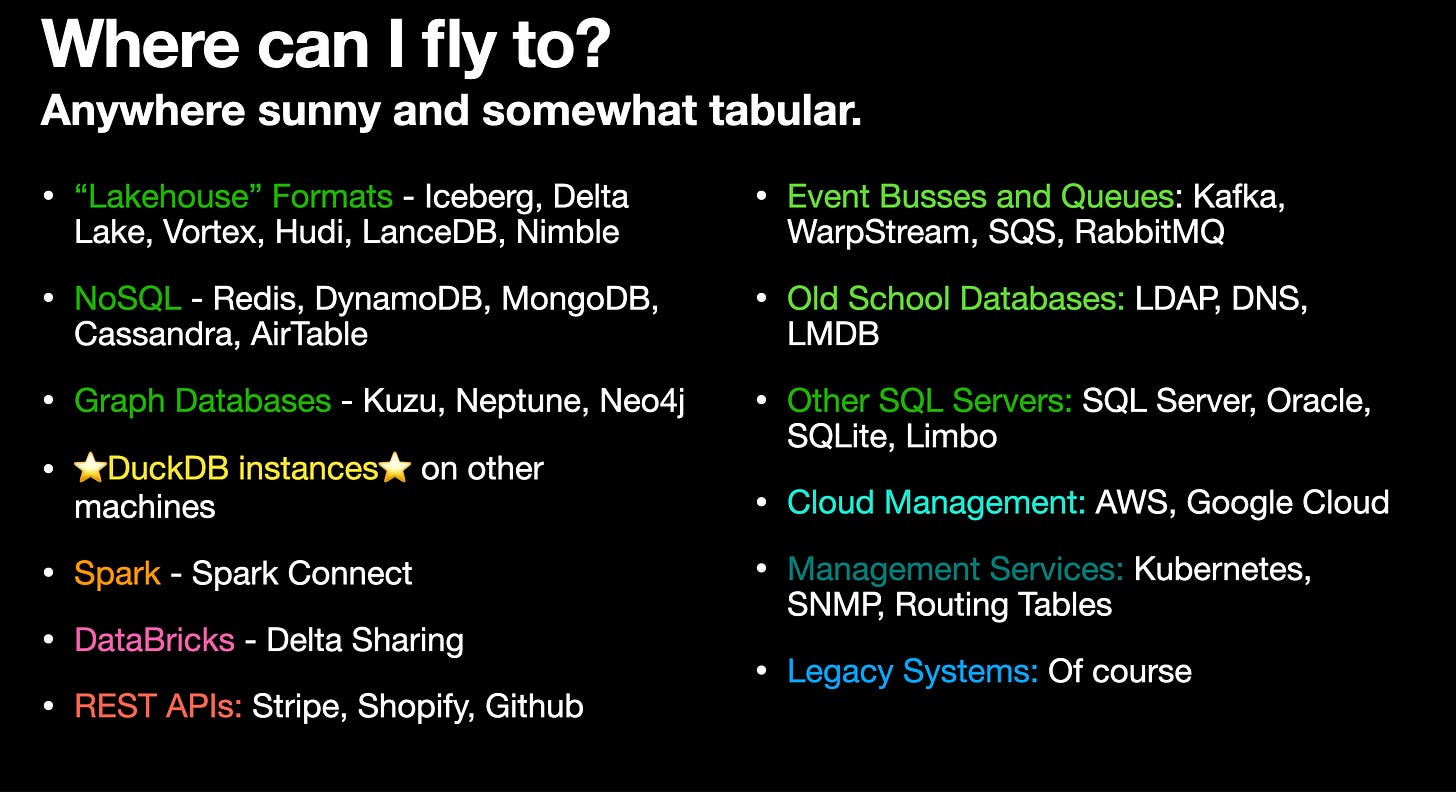

This extension connects DuckDB to an Arrow Flight server, which can run arbitrary Python code or connect to any system.

This allows you to extend DuckDB with any code—without needing to write C++.

It’s still very early (the extension is not yet listed among DuckDB's community extensions), but it looks promising.

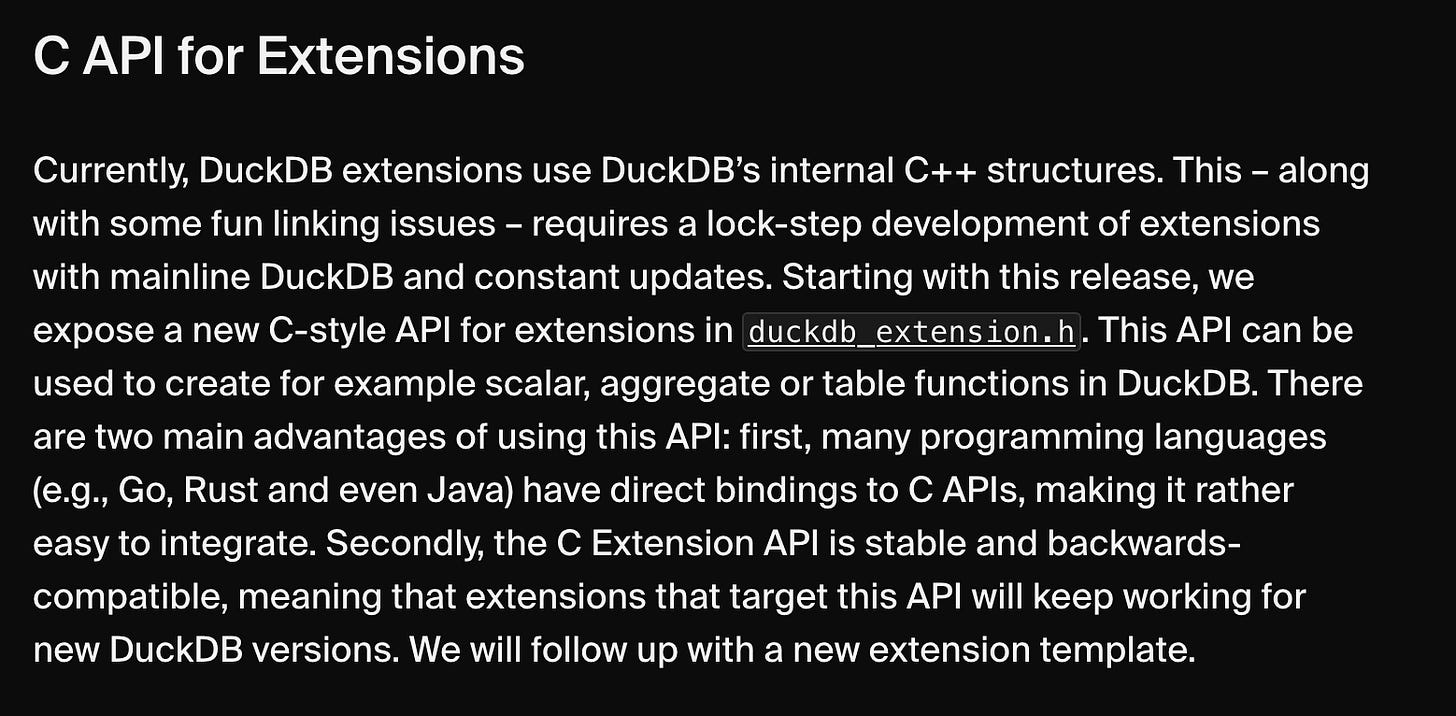

Note: The DuckDB team is actively working on improving extension development. At the beginning of this month, they released a C API to facilitate this process.

Snowflake + Snowpark

Now, let’s see what big cloud warehouse providers like Snowflake offer.

Snowflake is heavily SQL-centric—all features are packaged as SQL functions you call directly within queries.

However, interacting with external systems often requires making API requests.

And API requests are much easier to handle in Python…

To support this, Snowflake provides a Python API called Snowpark.

While it’s marketed as a Spark equivalent within Snowflake, I believe its real superpower is acting as Snowflake’s version of AWS Lambda (at the other end of the compute spectrum 😊).

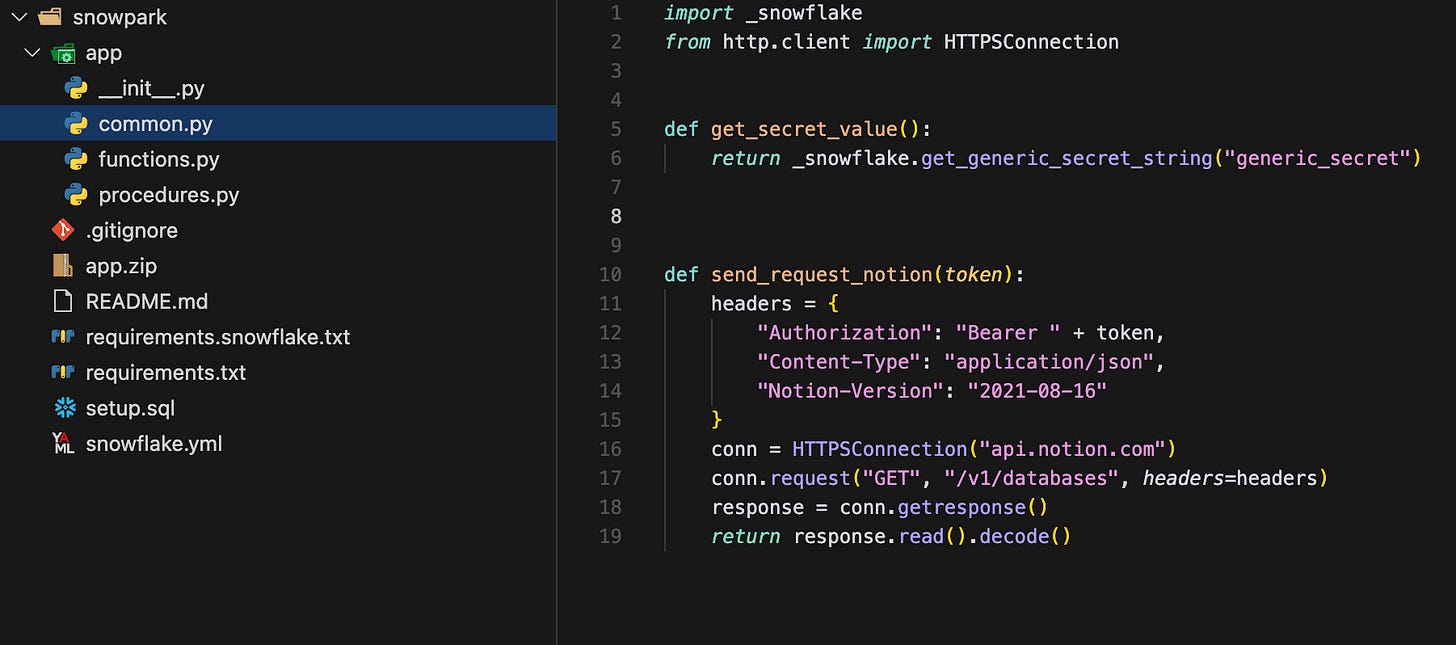

With Snowpark, you can quickly build custom SQL functions:

snow init snowpark --template snowpark_with_external_access

snow build

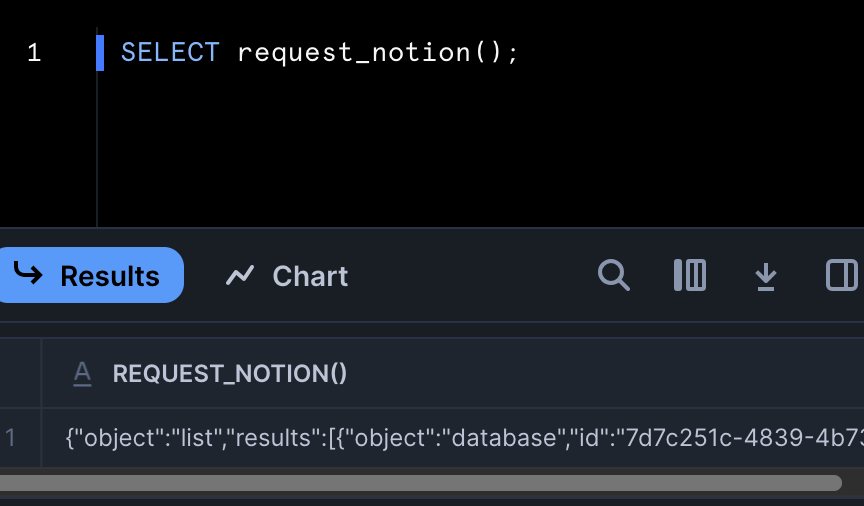

snow deploy In just 10 minutes, I was able to create a request_notion() function that lists all my Notion databases.
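I won't reproduce the template's generated code here, but the core of a function like request_notion() can be sketched in plain Python. This is a minimal sketch, assuming Notion's public search endpoint (`POST https://api.notion.com/v1/search`, filtered to databases) with the `2022-06-28` API version pinned. The helper names are my own, and the token is passed as an argument; inside Snowflake you would typically read it from a secret attached to the external access integration instead.

```python
import json
import urllib.request

NOTION_SEARCH_URL = "https://api.notion.com/v1/search"
NOTION_VERSION = "2022-06-28"  # assumption: API version pinned for this sketch


def build_search_request(token: str) -> urllib.request.Request:
    """Build a POST request to Notion's search endpoint, filtered to databases."""
    body = json.dumps({"filter": {"property": "object", "value": "database"}}).encode("utf-8")
    return urllib.request.Request(
        NOTION_SEARCH_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Notion-Version": NOTION_VERSION,
            "Content-Type": "application/json",
        },
    )


def extract_database_titles(payload: dict) -> list[str]:
    """Turn a search response into a list of plain-text database titles."""
    titles = []
    for result in payload.get("results", []):
        if result.get("object") != "database":
            continue
        # A database title is a list of rich-text fragments; join their plain text.
        parts = result.get("title", [])
        titles.append("".join(part.get("plain_text", "") for part in parts))
    return titles


def request_notion(token: str) -> list[str]:
    """Hypothetical handler body: call Notion and return database titles.

    Only the first page of results is fetched (no pagination handling).
    """
    with urllib.request.urlopen(build_search_request(token), timeout=30) as resp:
        return extract_database_titles(json.load(resp))
```

Keeping request building and response parsing in separate functions means both can be checked without network access; only request_notion() actually calls Notion.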

And even better: once your Python code is packaged inside an SQL function, you can integrate it as a post-hook in your dbt models. 🚀

```sql
{{ config(
    post_hook="SELECT YOUR_CUSTOM_SNOWPARK_FUNCTION()"
) }}

select ...
```

BigQuery + BigFunctions

Let’s move on to BigQuery.

BigQuery now offers a feature similar to Snowpark in Snowflake.

However, Paul took it a step further with BigFunctions.

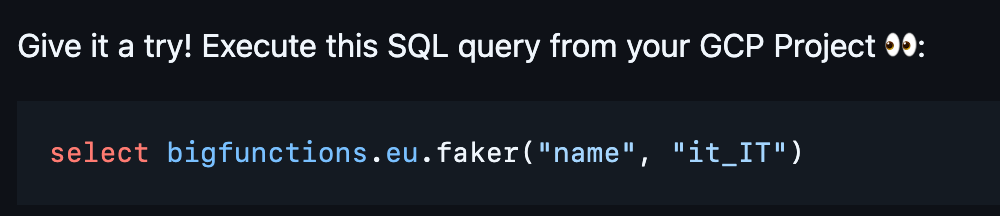

He created a collection of custom SQL functions that you can use out of the box without any installation.

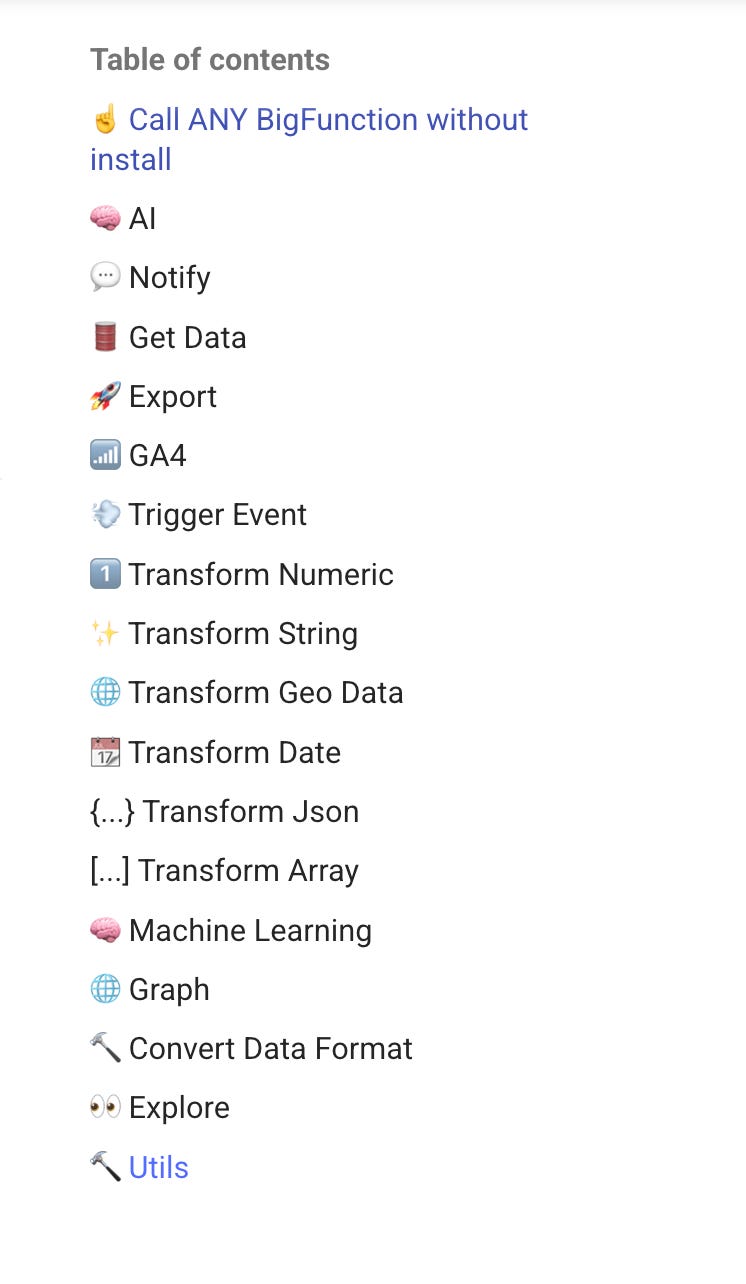

These functions cover the following categories.

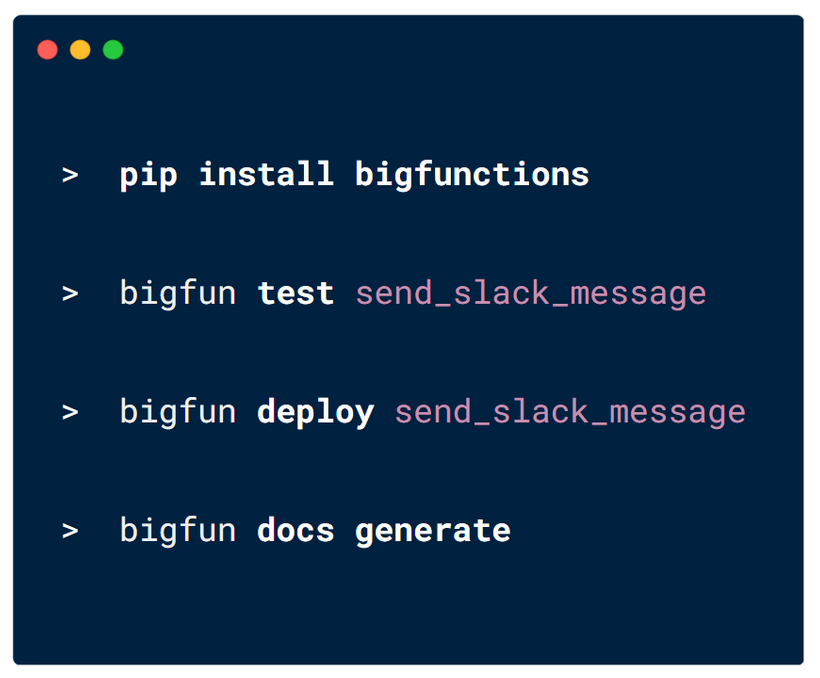

And if you want to build your own function, you can use BigFunctions’ CLI:

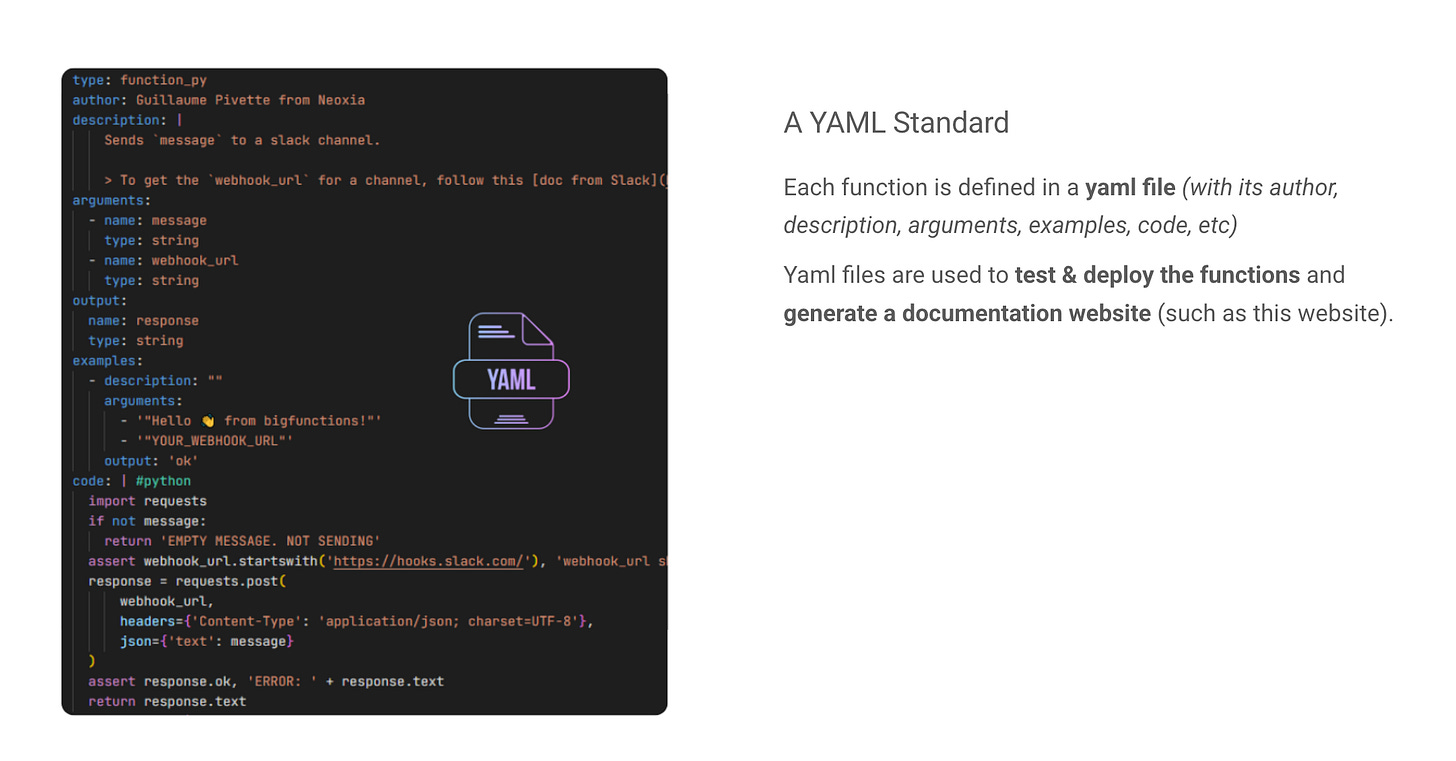

Each function is defined in a YAML file that embeds the function's code, and the CLI deploys it to:

• Google Cloud Run for Python functions

• BigQuery for JavaScript and SQL functions
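To make the Cloud Run side more concrete: assuming the Cloud Run service is exposed to BigQuery as a remote function, it receives rows in batches as JSON (`{"calls": [[...], ...]}`) and must answer with `{"replies": [...]}` in the same order, or `{"errorMessage": ...}` on failure. I haven't checked how BigFunctions generates its services, so treat this as a minimal stdlib sketch of that protocol only. `add_vat` is a hypothetical example function, and the HTTP server layer (routing, status codes) is left out.

```python
import json
from typing import Any, Callable


def make_remote_function_handler(fn: Callable[..., Any]) -> Callable[[str], str]:
    """Wrap a plain Python function so it speaks the BigQuery remote-function
    JSON protocol: {"calls": [[args...], ...]} in, {"replies": [...]} out."""

    def handle(body: str) -> str:
        try:
            request = json.loads(body)
            # BigQuery batches rows: each entry in "calls" holds one row's arguments,
            # and replies must come back in the same order.
            replies = [fn(*args) for args in request["calls"]]
            return json.dumps({"replies": replies})
        except Exception as exc:
            # Any failure (bad payload, wrong arity, fn error) becomes an errorMessage.
            return json.dumps({"errorMessage": f"{type(exc).__name__}: {exc}"})

    return handle


# Hypothetical example function, not part of BigFunctions.
def add_vat(amount: float, rate: float) -> float:
    return round(amount * (1 + rate), 2)


handler = make_remote_function_handler(add_vat)
```

The nice property of this split is that the business logic (`add_vat`) stays an ordinary Python function; only the thin wrapper knows about BigQuery's batching format.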

Here are some of the most-used BigFunctions functions today:

• send_mail() – Send an email

• get() – Fetch data from an API

• ask_ai() – Call an AI model

• exchange_rate() – Retrieve currency exchange rates

• faker() – Generate fake data

• send_slack_message() – Send messages to Slack

Paul, who works at Nickel, a French fintech, has integrated BigFunctions extensively into their workflows.

They use it to:

• Create Zendesk tickets

• Send data to Salesforce

• Send messages to Pub/Sub topics and handle client communications

• Send data to regulatory authorities

The best part?

Their data analysts can build these workflows end to end with dbt by triggering BigFunctions directly within their models.

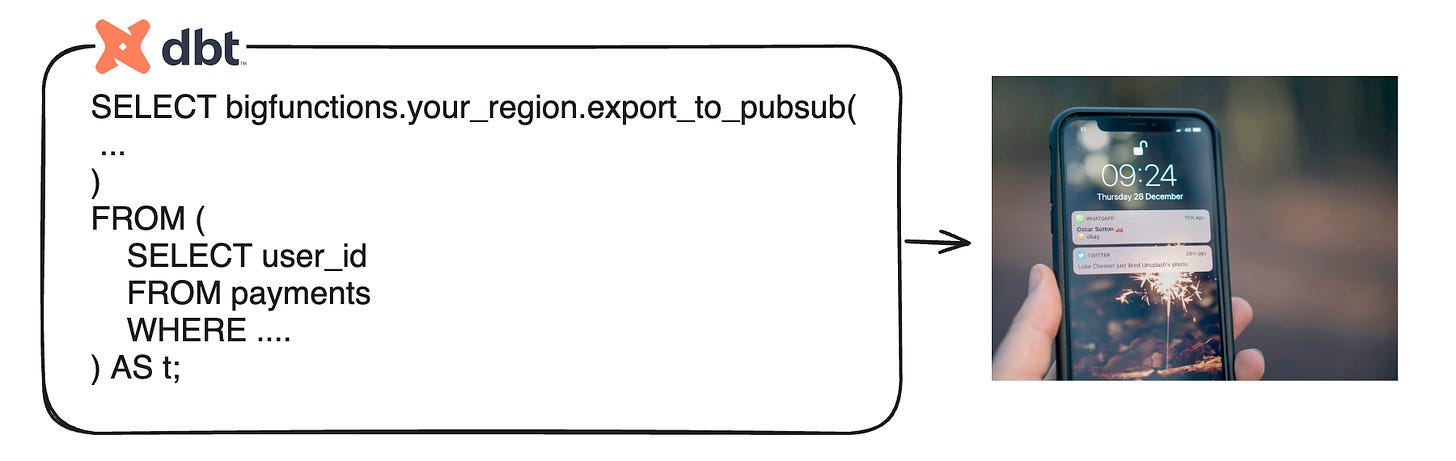

For example, say a user should receive a notification when a specific sequence of payment events occurs:

1. The sequence is calculated in a dbt model written by the analyst.

2. When the model materializes and detects the pattern, the export_to_pubsub() function automatically triggers a notification on the user’s device.

No engineers are needed in the middle!
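In the real setup, step 1 lives in the analyst's dbt SQL model. To show the logic itself, here is a Python sketch of the same detection: order each user's events by time (the equivalent of `PARTITION BY user_id ORDER BY occurred_at` in SQL) and look for a consecutive run matching the pattern. The event names, the pattern, and the notification payload are all hypothetical, and the actual export_to_pubsub() call is not shown.

```python
from dataclasses import dataclass
from datetime import datetime
from itertools import groupby

# Hypothetical pattern: two declined payments followed by a successful one.
PATTERN = ("payment_declined", "payment_declined", "payment_succeeded")


@dataclass(frozen=True)
class Event:
    user_id: str
    event_type: str
    occurred_at: datetime


def users_matching(events: list[Event], pattern: tuple[str, ...] = PATTERN) -> list[str]:
    """Return user ids whose time-ordered events contain `pattern` consecutively."""
    n = len(pattern)
    matches = []
    # Sort by user, then time, so groupby sees each user's events in order.
    ordered = sorted(events, key=lambda e: (e.user_id, e.occurred_at))
    for user_id, user_events in groupby(ordered, key=lambda e: e.user_id):
        types = [e.event_type for e in user_events]
        # Slide a window of length n over the user's event types.
        if any(tuple(types[i:i + n]) == pattern for i in range(len(types) - n + 1)):
            matches.append(user_id)
    return matches


def notification_messages(user_ids: list[str]) -> list[dict]:
    """Build one notification payload per matched user (hypothetical shape)."""
    return [{"user_id": uid, "template": "payment_recovered"} for uid in user_ids]
```

In the dbt version, the rows produced by the matching logic are what get handed to export_to_pubsub(); everything upstream of that call stays plain SQL the analyst owns.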

This is what makes this model so powerful:

• Analysts become fully autonomous

• They can ship new features independently

• Development cycles become faster than ever

Ultimately, this leads to more innovation and directly boosts the data team’s ROI.

Building a data stack is hard—too many moving pieces, too little time.

That’s where Boring Data comes in.

I’ve created a data stack onboarding package that combines ready-to-use templates with hands-on workshops, so your team can build its stack quickly and confidently.

Interested?

Check out boringdata.io or reply to this email—I’d be happy to walk you through our templates and workshops.

Thanks for reading.

-Ju
