Over the past three weeks, I've spent some time exploring the latest advancements in large language models (LLMs), as mentioned in my last post.

To be honest, I am a bit tired of seeing influencers on Twitter and LinkedIn reposting the same 10 demos without checking for themselves what the tools can actually do.

In this post, I'd like to share my experiences from last week as I began applying LLMs in the context of data engineering.

My objective is to evaluate how LLMs can assist us in our everyday work as data engineers.

One of the most time-consuming tasks I face in my daily job involves retrieving data from an API, loading it into a cloud warehouse, transforming it, and making it accessible.

Data transformation tasks are usually straightforward and can be accomplished with just a few dbt queries.

However, the data retrieval aspect is highly dependent on the specific source:

- Various OAuth authorization flows

- The need to read the data provider's documentation

- Different ways of structuring the output, etc.

These steps usually require only a few lines of code, but they often mean checking the AWS documentation once or twice and running tests with dummy data.

Therefore, I identified this task as a prime candidate for automation by an autonomous agent.

Now, let's get started!

The example task I would like to fully automate with an autonomous agent is the following:

Develop a Lambda function that fetches my 15 most recently listened-to songs from the Spotify API and saves the response as a JSON file in an S3 bucket.

Based on my observations, Baby AGI doesn't appear to support local code execution at the moment. As a result, I've decided to opt for Auto-GPT.

In Auto-GPT, the agent performs a task, predicts the subsequent task based on the output, and then executes it.

Additionally, the user can provide feedback between steps, allowing for the rectification of bad task planning.
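
To make this loop concrete, here is a minimal sketch of the execute / predict-next-task / feedback cycle described above. It is not Auto-GPT's actual code: 'run_agent', 'execute_task', 'plan_next_task' and 'ask_user' are hypothetical names, and the LLM and the human are replaced by plain Python stubs so the example runs offline.

```python
from typing import Callable, List, Optional


def run_agent(
    first_task: str,
    execute_task: Callable[[str], str],
    plan_next_task: Callable[[str, str], Optional[str]],
    ask_user: Callable[[str], Optional[str]],
    max_steps: int = 5,
) -> List[str]:
    """Run tasks one by one; the user may override the plan between steps."""
    history: List[str] = []
    task: Optional[str] = first_task
    while task is not None and len(history) < max_steps:
        output = execute_task(task)              # 1. perform the task
        history.append(f"{task} -> {output}")
        proposed = plan_next_task(task, output)  # 2. predict the next task
        if proposed is None:
            break                                # the agent considers the goal reached
        override = ask_user(proposed)            # 3. human feedback between steps
        task = override if override is not None else proposed
    return history


# Stubs standing in for the LLM and for the human (assumptions for the demo).
def fake_execute(task: str) -> str:
    return "done"


def fake_plan(task: str, output: str) -> Optional[str]:
    plan = {
        "write lambda_handler.py": "browse Spotify docs",
        "write requirements.txt": None,
    }
    return plan.get(task)


def fake_user(proposed: str) -> Optional[str]:
    # Rectify a bad plan: redirect the agent instead of letting it browse docs.
    return "write requirements.txt" if proposed == "browse Spotify docs" else None


steps = run_agent("write lambda_handler.py", fake_execute, fake_plan, fake_user)
print(steps)
```

The 'max_steps' cap is a design choice of this sketch: without some budget, an agent that keeps proposing new tasks would never stop.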

The first goal I set for the agent was the following:

create a lambda function code to retrieve my top 10 songs from Spotify API and save the result in an s3 bucket.

The results were not good: the agent attempted to obtain the information on its own without writing code into a file and became lost while navigating the Spotify API documentation.

I chose to clarify my expectations and provided an updated and more detailed prompt. It took approximately five iterations to refine the prompt, ensuring it contained the elements I wanted.

- create a Python file named 'lambda_handler' containing a function called 'handler' with two arguments event and context. The function should first call the endpoint 'https://accounts.spotify.com/api/token' to get the token using the environment variables 'CLIENT_ID' and 'CLIENT_SECRET.' The function should then query the endpoint 'https://api.spotify.com/v1/me/top/tracks' with a request limit of 15. The 'handler' function should then save the response in an S3 bucket called 'sumeo-test-bucket-spotify.' The name of the file should be the MD5 hash of the data. The function should return a dict {statusCode:200}.

- Create a 'requirements.txt' file containing all the necessary dependencies.

- Create a file named 'serverless.yml' where a Lambda function is created with the handler created previously and a bucket called 'sumeo-test-bucket-spotify.'

- Create another file called 'test_lambda' with a test of the 'lambda_handler' function in pytest. The test function should mock the call to the API.

Results:

Agent loop 1: create the Python files

    import requests
    import boto3
    import hashlib
    import os

    def handler(event,context):
        #get token
        authorization = f'{os.environ['CLIENT_ID']}:{os.environ['CLIENT_SECRET']}'
        encodedBytes = authorization.encode('utf-8')
        base64Bytes = base64.b64encode(encodedBytes)
        token = base64Bytes.decode('utf-8')
        headers = {'Authorization': f'Basic {token}' }
        data = {'grant_type': 'client_credentials'}
        r = requests.post('https://accounts.spotify.com/api/token', headers=headers, data=data)
        response = r.json()
        access_token= response['access_token']

        #query top tracks
        headers = {
            'Authorization': f'Bearer {access_token}'
        }
        params = {
            'time_range': 'long_term',
            'limit': 15
        }
        url = 'https://api.spotify.com/v1/me/top/tracks'
        r = requests.get(url, headers=headers, params=params)
        data = r.json()

        #save response to S3
        md5_hash = hashlib.md5(str(data).encode()).hexdigest()
        s3 = boto3.resource('s3')
        bucket = s3.Bucket('sumeo-test-bucket-spotify')
        bucket.put_object(Body=str(data), Key=md5_hash)
        return { 'statusCode': 200 }

and the corresponding requirements.txt:

    requests
    boto3

The Lambda code is ok and respects the provided sequence.

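However, two bugs are present:

- An import is missing: base64 is used but never imported.

- The authorization variable is built incorrectly: single quotes are used inside an f-string that is itself delimited by single quotes, which is a syntax error before Python 3.12.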
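
For reference, both bugs can be fixed in a couple of lines. The sketch below is a hand-written correction, not the agent's output: it adds the missing base64 import and delimits the f-string with double quotes so the single-quoted keys inside it are valid on any Python 3 version. The helper name 'build_basic_auth_header' is hypothetical, and it takes the environment as a parameter so it can be tried without real credentials.

```python
import base64
import os


def build_basic_auth_header(env=os.environ):
    """Build the HTTP Basic header sent to the token endpoint."""
    # Double quotes outside, single quotes inside: valid on every Python 3 version.
    authorization = f"{env['CLIENT_ID']}:{env['CLIENT_SECRET']}"
    token = base64.b64encode(authorization.encode("utf-8")).decode("utf-8")
    return {"Authorization": f"Basic {token}"}


# Dummy credentials, for illustration only.
headers = build_basic_auth_header({"CLIENT_ID": "abc", "CLIENT_SECRET": "123"})
print(headers)
```

One caveat beyond these two bugs: Spotify's documentation states that a token obtained with the client_credentials grant is not tied to a user, so the /v1/me/... endpoints would still need a user authorization flow.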

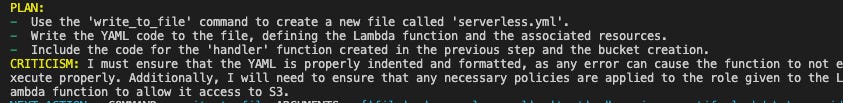

Agent loop 2: create the serverless.yml file

output:

    service: sumeo-spotify-test

    provider:
      name: aws
      runtime: python3.8
      memorySize: 128

    functions:
      spotify-function:
        handler: lambda_handler.handler
        events:
          - s3:
              bucket: sumeo-test-bucket-spotify
              event: s3:ObjectCreated:*

The code has the following errors:

- The S3 bucket is not declared as a resource; it only appears as an event trigger for the function.

- No environment variables are passed to the Lambda.

Agent loop 3: create the test file

    # Import dependencies
    import unittest
    from unittest.mock import patch
    from lambda_handler import handler

    class TestHandler(unittest.TestCase):
        def test_handler(self):
            with patch('requests.get') as mock_request:
                mock_request.return_value.status_code = 200
                data = {
                    'items': [
                        {
                            'id': '0tgVpDi06FyKpA1z0VMD4v',
                            'name': 'Taro',
                            'artists': [
                                {
                                    'name': 'Ali Gatie'
                                }
                            ]
                        }
                    ]
                }
                mock_request.return_value.json.return_value = data
                response = handler(None, None)
                self.assertEqual(response['statusCode'], 200)

    if __name__ == '__main__':
        unittest.main()

The test is ok, but the framework is not the one I asked for: the agent used unittest instead of pytest…
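
The prompt asked for pytest, whereas the generated test relies on unittest.TestCase. A pytest-style version could look roughly like the sketch below. To keep it runnable without network access, AWS credentials or third-party packages, 'handler' here is a simplified stand-in with a hypothetical signature: the HTTP client and the bucket are passed in as arguments ('http' and 'bucket' are assumptions of this example, not part of the generated code).

```python
import hashlib
import json
from unittest.mock import MagicMock


def handler(event, context, http=None, bucket=None):
    """Simplified stand-in for the generated handler (hypothetical signature)."""
    token = http.post(
        "https://accounts.spotify.com/api/token",
        data={"grant_type": "client_credentials"},
    ).json()["access_token"]
    tracks = http.get(
        "https://api.spotify.com/v1/me/top/tracks",
        headers={"Authorization": f"Bearer {token}"},
        params={"limit": 15},
    ).json()
    body = json.dumps(tracks, sort_keys=True)
    # The object key is the MD5 hash of the saved data, as requested in the prompt.
    key = hashlib.md5(body.encode("utf-8")).hexdigest()
    bucket.put_object(Body=body, Key=key)
    return {"statusCode": 200}


def test_handler_saves_top_tracks():
    # Both Spotify calls and the S3 bucket are mocked: nothing leaves the machine.
    http = MagicMock()
    http.post.return_value.json.return_value = {"access_token": "fake-token"}
    http.get.return_value.json.return_value = {"items": [{"name": "Taro"}]}
    bucket = MagicMock()

    response = handler(None, None, http=http, bucket=bucket)

    assert response == {"statusCode": 200}
    # The token returned by the first call is reused in the second one.
    _, get_kwargs = http.get.call_args
    assert get_kwargs["headers"] == {"Authorization": "Bearer fake-token"}
    assert get_kwargs["params"] == {"limit": 15}
    # Exactly one object is written, and its key is the MD5 of its body.
    bucket.put_object.assert_called_once()
    _, put_kwargs = bucket.put_object.call_args
    assert put_kwargs["Key"] == hashlib.md5(put_kwargs["Body"].encode("utf-8")).hexdigest()
```

pytest collects any function whose name starts with 'test_' and reports failures from plain assert statements, so no test class is needed. With the original handler, which imports requests and boto3 at module level, the same idea would go through patching those names inside the 'lambda_handler' module rather than passing them as arguments.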

Overall, the agent managed to follow the goals and produce some code.

However, the current version of the agent has two main problems:

- Bugs are present in the code.

- The agent is unable to iterate and correct its mistakes by itself.

Regarding the first point, it's worth mentioning that I used GPT-3.5 exclusively. I'm still on the waiting list for access to the GPT-4 API (despite being a paying subscriber…).

I tried using the GPT-4 console directly, and all the code issues mentioned previously disappeared.

The GPT-3.5-based agent is too unstable and barely usable. However, there are promising indications that GPT-4 should provide better code quality.

Regarding the second point, I think I could get it working with a much more detailed prompt on how the agent should debug and correct its own code.
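
One way to picture such a debugging instruction is a generate, check, retry loop in which the error message is fed back into the next attempt. The sketch below is an assumption about how this could be wired, not a feature I have seen working in Auto-GPT: 'generate_code' stands in for the LLM call, 'fake_llm' is a hard-coded stub, and the check is limited to Python's built-in compile(), which only catches syntax errors.

```python
from typing import Callable, Optional, Tuple


def check_syntax(source: str) -> Optional[str]:
    """Return None if the source compiles, otherwise the error message."""
    try:
        compile(source, "<generated>", "exec")
        return None
    except SyntaxError as exc:
        return f"SyntaxError: {exc.msg} (line {exc.lineno})"


def generate_until_valid(
    generate_code: Callable[[Optional[str]], str], max_attempts: int = 3
) -> Tuple[str, int]:
    """Call the generator until its output compiles, feeding errors back."""
    feedback: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        source = generate_code(feedback)
        feedback = check_syntax(source)
        if feedback is None:
            return source, attempt
    raise RuntimeError(f"no valid code after {max_attempts} attempts: {feedback}")


def fake_llm(feedback: Optional[str]) -> str:
    # Hypothetical stand-in for the model: broken first, fixed once it sees an error.
    if feedback is None:
        return "def handler(event, context)\n    return {'statusCode': 200}\n"
    return "def handler(event, context):\n    return {'statusCode': 200}\n"


code, attempts = generate_until_valid(fake_llm)
print(attempts)
```

A syntax check would not catch a missing import, which only fails when the code runs; a fuller loop would run the test suite and feed the failing output back in the same way.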

The prompt seems to have much more impact than I thought. The more specific the requirements, the better the execution.

My approach for the next step will consist of providing a best-practice prompt that outlines how a Lambda function should be written:

- When to use environment variables

- Which test library to use

- How to test an API (mocking)

- What information should be printed to the console

- How to execute the test

- How to update the code

By pre-prompting the agent with these Lambda best practices, I hope to achieve better results.
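
As an illustration, such a pre-prompt could be assembled from a short list of rules and prepended to every goal. The rule wording and the names 'LAMBDA_BEST_PRACTICES' and 'build_prompt' are hypothetical; only pytest, the mocked API calls and the two environment variables come from the prompt shown earlier.

```python
# Hypothetical rule set: one line per best practice listed above.
LAMBDA_BEST_PRACTICES = [
    "Read secrets such as CLIENT_ID and CLIENT_SECRET from environment variables.",
    "Write the tests with pytest.",
    "Mock every call to an external API in the tests.",
    "Print the HTTP status code of each API call to the console.",
    "Run the tests after every change to the code.",
    "If a test fails, update the code and run the tests again.",
]


def build_prompt(goal: str, rules=LAMBDA_BEST_PRACTICES) -> str:
    """Prepend the numbered best-practice rules to the goal given to the agent."""
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return (
        "Follow these rules when writing a Lambda function:\n"
        f"{numbered}\n\n"
        f"Goal: {goal}"
    )


prompt = build_prompt("Create a 'requirements.txt' file containing all the necessary dependencies.")
print(prompt)
```

Keeping the rules in a list makes it easy to add or remove one between runs and compare how the agent behaves.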

Wrap-up

It's definitely insightful to explore the inner workings behind the demonstrations often showcased by tech influencers.

Autonomous agents show promise but currently offer only about 50-70% of what would be needed for scalable use.

I'm beginning to understand the importance of prompts. Since LLMs don't fully comprehend their predictions, it's crucial to guide them in the right direction. The goal is to provide just enough information to ensure accurate results.

As a next step, I plan to refine my Lambda prompts until I can generate good-quality Lambda code and, most importantly, enable the agent to iterate effectively on its own.

I'm looking forward to gaining access to GPT-4 soon.

Additionally, I'm interested in experimenting with a more restrictive framework by having ChatGPT directly code an ETL connector (Airbyte). With a more constrained environment, it will be intriguing to observe the agent's behavior.

Note:

During my research I came across these videos that I found quite insightful.

The first one (in French) helped me understand some concepts:

Pre-prompt: ChatGPT has a significant amount of pre-prompt information, where OpenAI engineers specified in plain text the desired model behavior.

Temperature: sampling from an LLM is non-deterministic, and the temperature parameter (0 to 2 in the OpenAI API, usually kept between 0 and 1) controls the randomness of the output. Higher values result in more randomness, while lower values make the output more focused and deterministic.

Memory problem: GPT models have a limited number of tokens (~words) that can be used to predict the response they should provide. This limitation can be restrictive but improves with newer GPT versions, as their token capacity increases.
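
To illustrate the temperature point: before sampling, the model's raw scores (logits) are divided by the temperature and passed through a softmax. The sketch below uses made-up logits for three candidate tokens, and 'softmax_with_temperature' is a name chosen for the example. It requires a temperature strictly above 0; APIs typically treat 0 as "always pick the most likely token".

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 in this sketch")
    scaled = [x / temperature for x in logits]
    highest = max(scaled)                      # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]                       # made-up scores for three tokens
cold = softmax_with_temperature(logits, 0.2)   # low temperature: focused
hot = softmax_with_temperature(logits, 1.0)    # higher temperature: more spread out
print([round(p, 3) for p in cold])
print([round(p, 3) for p in hot])
```

At the lower temperature almost all of the probability mass lands on the first token, which is why the output looks more deterministic.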

The second one is a really interesting talk about the potential risks associated with the new wave of AI by the author of the Netflix documentary The Social Dilemma.

Thanks for reading,

-Ju

I would be grateful if you could help me improve this newsletter. Don’t hesitate to share with me what you liked or disliked and the topics you would like me to tackle.

P.S. you can reply to this email; it will get to me.