Iceberg + Single Node Engines

Ju Data Engineering Weekly - Ep 75

Bonjour!

I'm Julien, freelance data engineer based in Geneva 🇨🇭.

Every week, I research and share ideas about the data engineering craft.

Not subscribed yet?

I wanted to enhance the newsletter experience by sharing the song I’m listening to while writing this piece.

Please put on your headset, crank up the volume, and let’s go!

The compute category has evolved a lot these past years

We started with warehouses where compute and storage were tightly coupled.

Next, we transitioned to solutions with separate compute and storage within the same provider.

Now, we are adopting fully decoupled storage using Iceberg.

The most significant advantage of this transition is the ease of integrating single-node engines (like DuckDB, Polars, and others) with the rest of the stack.

Previously, taking parts of a pipeline outside the warehouse required a separate ETL to move data around.

Building and maintaining this ETL often killed the ROI of using cheaper compute.

With Iceberg as a bridge, we can now run workflows on different engines without copying data.

I’ve been considering how to integrate these engines into a pipeline over the past few weeks.

Let’s explore some potential approaches.

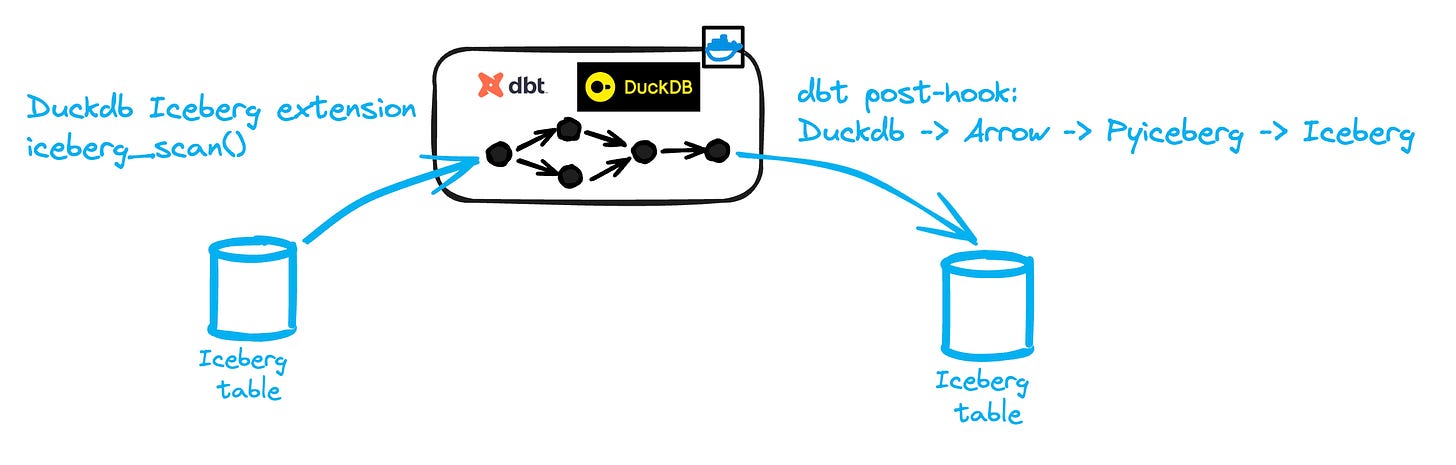

Option 1: one project = one worker

This is the most straightforward approach: run your entire dbt project in a single Docker container using DuckDB as the sole engine.

The workflow looks like this:

1. dbt sources are read via the DuckDB Iceberg extension

2. The dbt DAG is executed with DuckDB as the engine.

3. Target tables are materialized to Iceberg using PyIceberg.

Pros:

• Simplicity

• Efficient data passing across models

Cons:

• All models must fit in the instance’s memory

• No materialization of intermediate models

Option 2: one model = one worker

In this model, each transformation is handled by a separate worker.

This worker loads the source table into memory, performs the query, and writes the results to a destination Iceberg table.

The code using DuckDB would look like this:

Instead of running a full materialization, we could switch to an incremental load.

However, PyIceberg isn’t yet optimized for MERGE INTO operations.

Currently, the only option is to use OVERWRITE with an overwrite_filter matching updated rows, but I’ve experienced performance degradation with this method.

Pros:

• All models are materialized to Iceberg.

• You can control the resources used by each model (instance size).

Cons:

• It only works as long as table fits in worker’s memory.

• Inefficient incremental models.

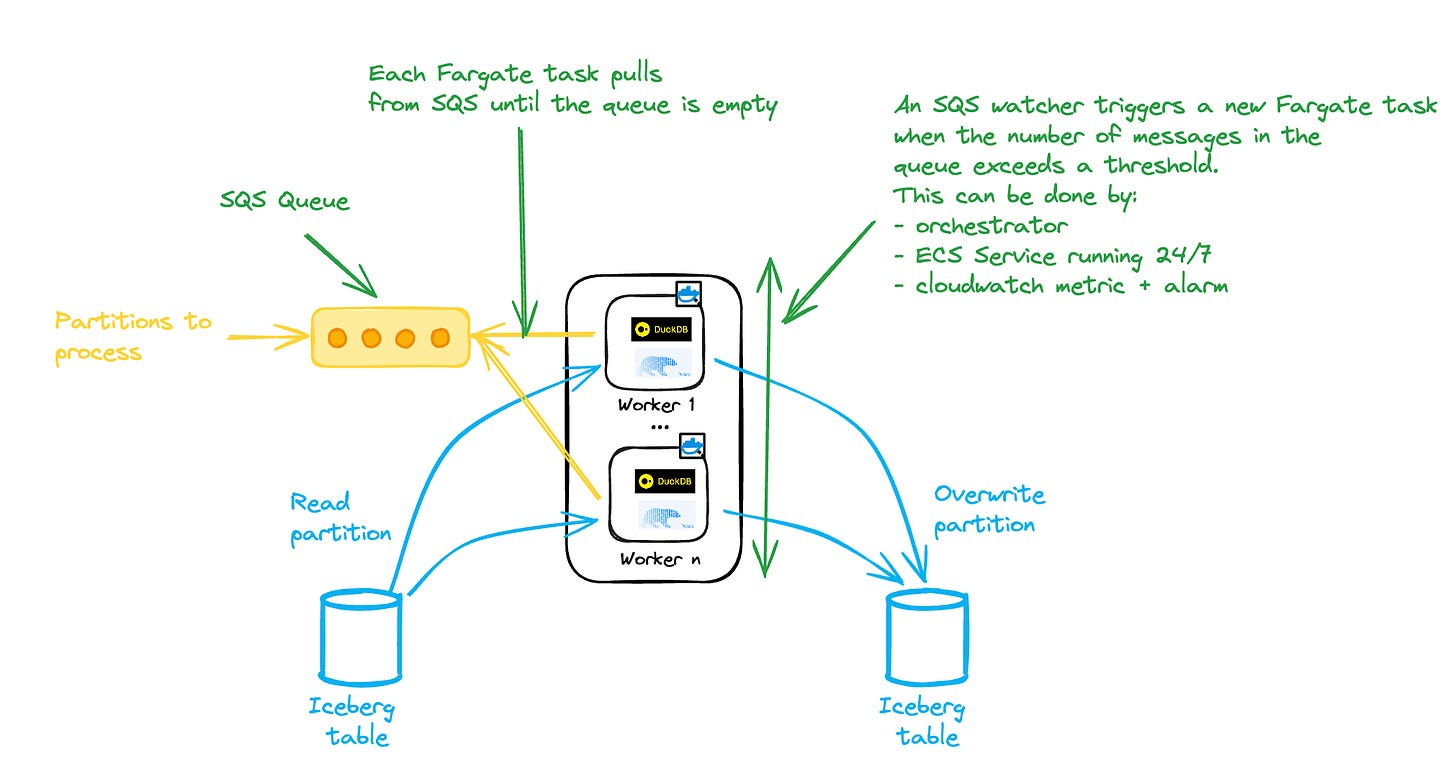

Option 3: one partition = one worker

One possible approach to counter the abovementioned limitations is to work at the partition level.

I discussed this approach in a previous article:

The idea is to divide a dataset into partitions that exist independently.

Each partition is immutable and consistently overwritten, avoiding the row-level limitations of PyIceberg discussed earlier.

Each worker is then sized to handle a single partition.

Add more workers to process additional partitions for large backfills or initial loads—there’s no need for a particular ‘large’ instance.

Limiting the number of concurrent workers can be beneficial in this setup, as too many can lead to excessive write conflicts and retries.

This can be achieved by adding a buffer/queue from which each worker pulls partitions to process.

To boost throughput, you can then allocate more memory to a worker and process multiple partitions in a single run (one Iceberg commit).

You might ask: what if I want to perform cross-partition aggregations?

In that case, if a single worker isn’t large enough, our favorite data warehouses are here to help :)

Pros:

• Can handle large tables.

• Offers better control over worker sizing as the tables grow.

• Capable of handling large backfills and continuous loads with the same setup.

Cons:

• Requires an orchestrator to track which partitions to process.

• Must control the number of concurrent writers.

AWS Implementation

If your partition processing fits within the Lambda limitations of 10GB and 15 minutes, you can directly leverage the SQS + Lambda pattern.

But that’s probably not the case, and you’ll probably need to move to ECS Fargate.

ECS Fargate is an AWS service that allows you to run containers in a serverless manner.

You can get large runners (up to 120GB, 16vpcu) that run containers hosted in ECR.

This comes with some limitations:

• Tasks must run in a VPC (a distinct IP is assigned to each task, so ensure enough IPs are available).

• Has a longer cold start time than Lambda (~ 1 min).

• Does not support SQS triggers.

Since there is no direct SQS to Fargate trigger, we need to implement an additional SQS-watching service that will start new tasks based on the SQS queue depth:

Orchestration

Ideally, we would like to use a tool like dbt to handle model lineage, but dbt does not currently support remote execution in an ECS task or Iceberg (for now).

Therefore, we must rely on an orchestrator to manage the various models.

This orchestrator needs to perform the following tasks for each model:

1: Get the partitions to update

2: Trigger the processing of each partition (ECS task) while controlling concurrency.

3: Wait for the processing of all the partitions

This can be done with various orchestrators:

Step function with Map + ECS RunTask state

Airflow with EcsRunTaskOperator + Dynamic Task Mapping

Dagster via PipesECSClient + partitioned asset

I’ll likely share a detailed implementation with one of these orchestrators in a future post.

I will also include a benchmark comparing the price and performance of this setup to their warehouse counterparts.

Stay tuned!

Building a data stack is hard—too many moving pieces, too little time.

That’s where Boring Data comes in.

I’ve created a data stack onboarding package that combines ready-to-use templates with hand-on workshops, empowering your team to quickly and confidently build your stack.

Interested?

Check out boringdata.io or reply to this email—I’d be happy to walk you through our templates and workshops.

Thanks for reading

-Ju