Data Eng. in 2025

Ju Data Engineering Weekly - Ep 79

Bonjour!

I'm Julien, freelance data engineer based in Geneva 🇨🇭.

Every week, I research and share ideas about the data engineering craft.


Thanks a lot for being a subscriber to this newsletter.

Where I’m from in France, people say we can wish a Happy New Year until January 15th.

So, I guess there’s still room for (yet another…) post about 2025 predictions!

This post will be a bit shorter than usual and will cover some of the developments I see for this year in data engineering.

Let’s go!

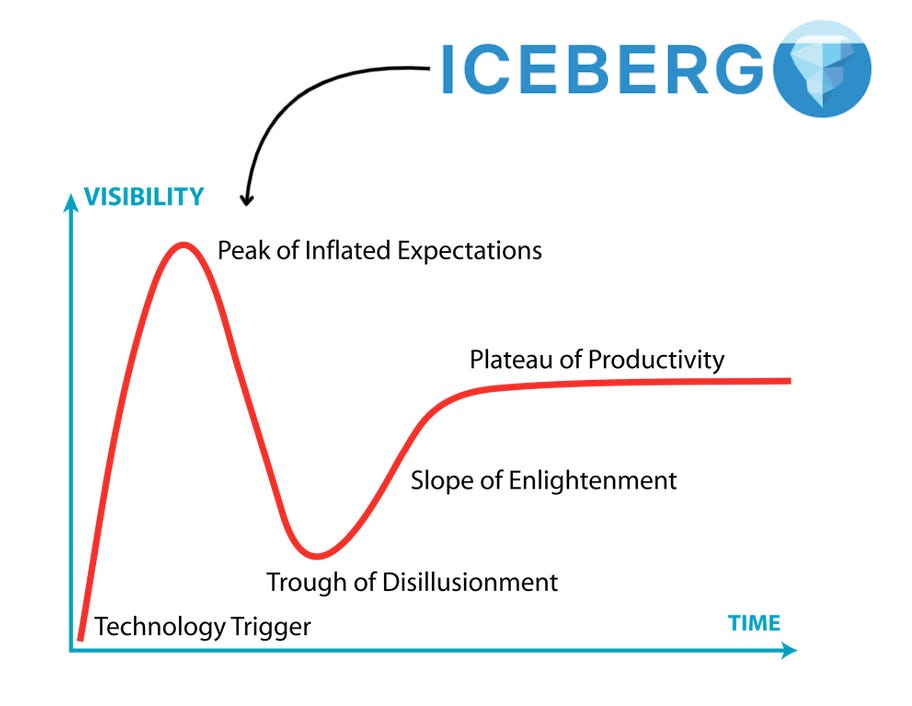

Iceberg: from Peak to Plateau

Of course, Iceberg...

In 2024, we saw the explosion of table formats, particularly Iceberg.

This was driven by the major providers, which have all built integrations: Snowflake, BigQuery, and AWS with S3 Tables.

On paper, the premise of Iceberg and other table formats is super exciting: no more ETL to your warehouse.

This unlocks so many use cases, especially in terms of cost reduction.

But!

Anyone working with Iceberg will quickly notice that the onboarding process is still chaotic: catalog creation, object naming, read/write compatibility.

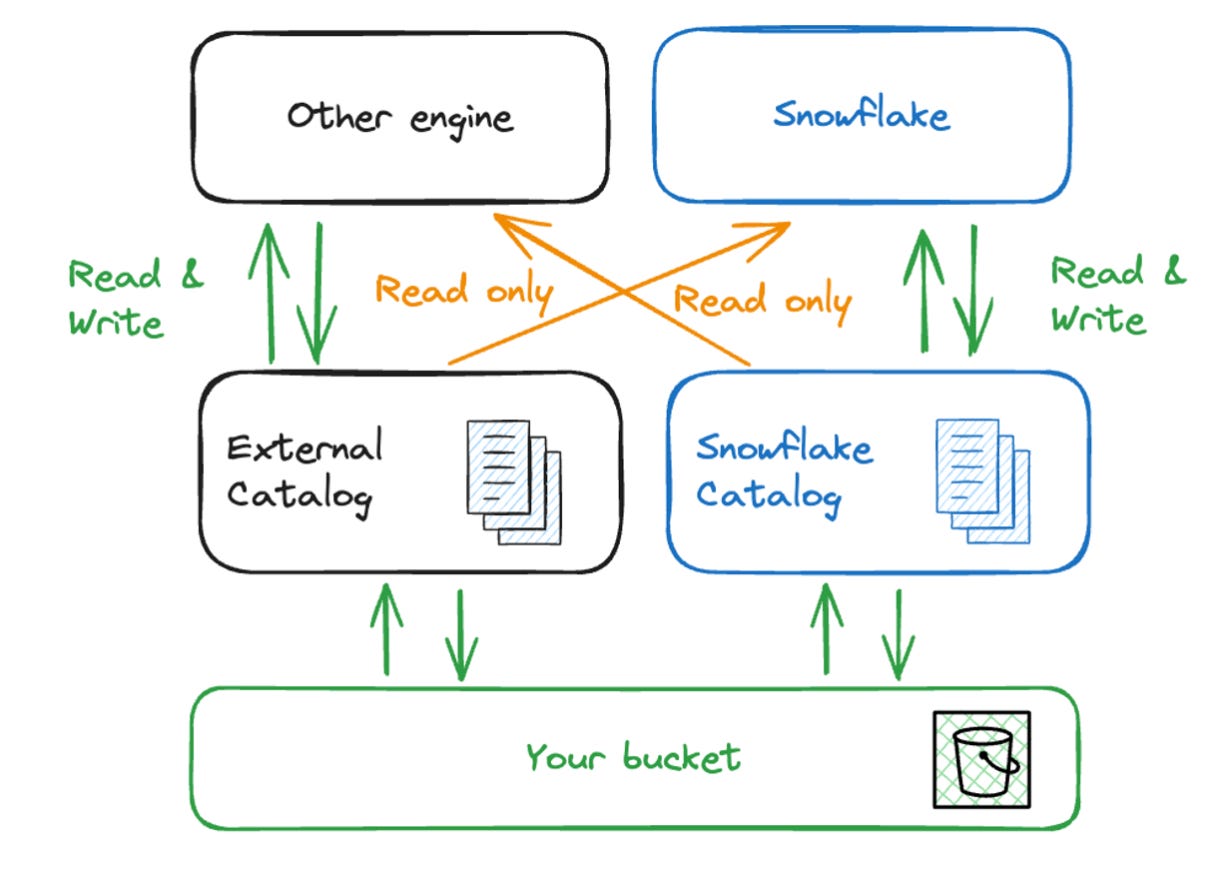

For instance, big providers seem to be converging on the following integration model:

• Closed Iceberg tables that they manage themselves

• Read-only access to tables managed by other providers
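To make this integration model concrete, here is a deliberately simplified toy in Python. It is not the Iceberg spec or any provider's API: `ToyCatalog`, `TableMetadata`, and the provider names are hypothetical, and real Iceberg metadata tracks snapshots, manifest lists, and manifests rather than a flat list of files. It mimics two real ideas: the catalog holds a pointer to each table's current metadata and a commit swaps that pointer only if nobody committed in between, and, per the model above, only the owning provider may commit while other engines can only read.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class TableMetadata:
    """Toy stand-in for an Iceberg metadata file (real ones track snapshots and manifests)."""
    version: int
    data_files: tuple[str, ...]


@dataclass
class ToyCatalog:
    """Hypothetical provider catalog mapping table names to their current metadata."""
    owner: str
    tables: dict[str, TableMetadata] = field(default_factory=dict)

    def load(self, name: str) -> TableMetadata:
        # Reads are open: any engine can resolve the current metadata pointer.
        return self.tables[name]

    def commit(self, engine: str, name: str, expected_version: int,
               new_files: tuple[str, ...]) -> TableMetadata:
        # Writes are closed: only the owning provider may commit.
        if engine != self.owner:
            raise PermissionError(f"{engine} has read-only access to {name}")
        current = self.tables.get(name)
        current_version = current.version if current else 0
        # Optimistic concurrency: fail if another writer committed first.
        if current_version != expected_version:
            raise RuntimeError(f"{name} is at version {current_version}, retry")
        base_files = current.data_files if current else ()
        new_meta = TableMetadata(current_version + 1, base_files + new_files)
        # Stands in for the catalog's atomic swap of the metadata pointer.
        self.tables[name] = new_meta
        return new_meta


catalog = ToyCatalog(owner="provider_a")
catalog.commit("provider_a", "sales", expected_version=0,
               new_files=("part-0001.parquet",))

# Another provider's engine can read the table...
print(catalog.load("sales"))

# ...but cannot write to it.
try:
    catalog.commit("provider_b", "sales", expected_version=1,
                   new_files=("part-0002.parquet",))
    foreign_write_allowed = True
except PermissionError as err:
    foreign_write_allowed = False
    print(err)
```

A truly open read/write standard would amount to dropping the ownership check and relying on the version check alone to arbitrate concurrent writers.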

This is very confusing for the user, especially given the endless list of names providers use for the same objects:

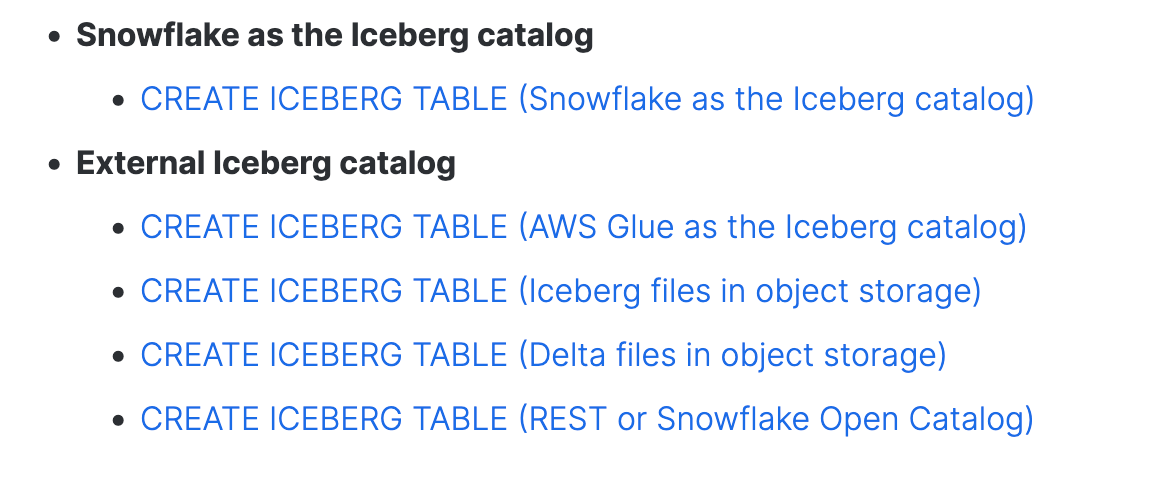

Snowflake has “Iceberg tables” with many variations.

AWS has S3 Tables.

My (unrealistic) hope for 2025 is that providers will adopt a single, truly open (read/write) Iceberg table standard.

Furthermore, Iceberg is still largely JVM-oriented.

The apache/iceberg repo is a Java reference implementation that includes Spark, Flink, Hive, and Pig modules.

Other implementations extend Iceberg’s compatibility beyond the JVM:

• Go: iceberg-go

• Rust: iceberg-rust

• Python: iceberg-python (PyIceberg)

However, they still lag behind the Java implementation, and some key features are missing.

Hopefully, big providers will support these non-JVM Iceberg libraries to accelerate their development and close the gap with the Java version.

I think the excitement for Iceberg will continue in 2025 but may slow down until integrations mature enough to offer a good experience to non-JVM users.

Multi-engine stacks

As Iceberg becomes the storage layer of our platforms, users will want to mix and match engines:

• Single-node engines for pipelines

• Cloud warehouses for corporate-level governance

The driver is clear: cost savings.

People are tired of paying large vendors to run queries on less than 10-50 GB of data.

We started exploring the feasibility of this kind of stack with Sung from SQLMesh.

We will probably repeat this experiment in the coming weeks.

As Iceberg gains traction, I believe SQL frameworks will implement native support for mixing engines in 2025.
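A framework with native multi-engine support would have to decide, for each model, which engine runs it. Here is a hypothetical sketch of that decision, not how SQLMesh or dbt actually work: an explicit per-model engine wins; otherwise, models estimated to scan less than an assumed 50 GB threshold go to a single-node engine and everything else goes to the warehouse. The engine names, the threshold, and `resolve_engine` are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical defaults: engine names and the threshold are assumptions.
SINGLE_NODE_ENGINE = "duckdb"
WAREHOUSE_ENGINE = "warehouse"
SINGLE_NODE_LIMIT_GB = 50.0


@dataclass
class Model:
    name: str
    estimated_scan_gb: float
    engine: Optional[str] = None  # explicit override, like profile="duckdb"


def resolve_engine(model: Model, configured_engines: set[str]) -> str:
    """Pick the engine that should run a model."""
    # 1. An explicit per-model choice always wins, if that engine is configured.
    if model.engine is not None:
        if model.engine not in configured_engines:
            raise ValueError(f"{model.name}: engine {model.engine!r} is not configured")
        return model.engine
    # 2. Otherwise, route small workloads to the cheap single-node engine.
    if model.estimated_scan_gb < SINGLE_NODE_LIMIT_GB:
        return SINGLE_NODE_ENGINE
    # 3. Everything else goes to the cloud warehouse.
    return WAREHOUSE_ENGINE


engines = {"duckdb", "warehouse"}
models = [
    Model("stg_orders", estimated_scan_gb=4.0),
    Model("fct_revenue", estimated_scan_gb=800.0),
    Model("dim_customers", estimated_scan_gb=120.0, engine="duckdb"),
]
plan = {m.name: resolve_engine(m, engines) for m in models}
print(plan)
```

Because every engine reads the same Iceberg tables, switching a model between engines changes only where it runs, not where its data lives.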

Hopefully, this will become as simple as specifying a profile in a model configuration:

```sql
{# profile: "duckdb" or any engine configured in profiles.yml #}
{{
  config(
    profile="duckdb"
  )
}}
```

Data Platform in a Box

Data platform costs are 10% storage, 20% compute, and 70% engineering.

So, the natural innovation path for startups is to target the 70% of this “cake.”
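A quick back-of-the-envelope check shows why. Using the 10/20/70 split above and two hypothetical reduction rates, halving compute saves 10% of total cost, while cutting engineering effort by only 30% saves 21%. `total_savings` is just an illustrative helper:

```python
# Cost shares come from the rule of thumb above; reduction rates are hypothetical.
COST_SHARES = {"storage": 0.10, "compute": 0.20, "engineering": 0.70}


def total_savings(reductions: dict[str, float]) -> float:
    """Fraction of total platform cost saved, given a reduction rate per category."""
    return sum(COST_SHARES[category] * rate for category, rate in reductions.items())


halve_compute = total_savings({"compute": 0.5})
trim_engineering = total_savings({"engineering": 0.3})
print(f"Halving compute saves {halve_compute:.0%} of the total")
print(f"Cutting engineering effort by 30% saves {trim_engineering:.0%}")
```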

One approach I’m seeing more and more is the platform-in-a-box model.

Many solutions are now on the market: 5X, Keboola, Y42, Mozart, and, more recently, Bruin.

They bundle existing open-source or custom tools into a single SaaS offering.

They generally offer usage-based pricing, which is both their strength (low initial cost) and their weakness (expensive scaling).
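The pricing trade-off can be sketched as a simple breakeven calculation. All prices and growth rates below are hypothetical, chosen only to show the shape of the curve, not any vendor's actual pricing: usage-based pricing wins at low volume, but with steadily growing data volumes it eventually overtakes a flat fee.

```python
from typing import Optional

# Hypothetical prices for illustration only; not any vendor's actual pricing.
PRICE_PER_UNIT = 2.0   # usage-based price per unit of work (e.g. per compute credit)
FLAT_FEE = 1_000.0     # flat monthly fee of an alternative offer


def breakeven_units(flat_fee: float, price_per_unit: float) -> float:
    """Monthly usage above which usage-based pricing costs more than the flat fee."""
    return flat_fee / price_per_unit


def months_until_breakeven(start_units: float, monthly_growth: float,
                           flat_fee: float, price_per_unit: float,
                           max_months: int = 120) -> Optional[int]:
    """First month in which growing usage makes usage-based pricing the pricier option."""
    threshold = breakeven_units(flat_fee, price_per_unit)
    units = start_units
    for month in range(max_months + 1):
        if units > threshold:
            return month
        units *= 1 + monthly_growth
    return None  # no crossover within the horizon


threshold = breakeven_units(FLAT_FEE, PRICE_PER_UNIT)
month = months_until_breakeven(100, 0.10, FLAT_FEE, PRICE_PER_UNIT)
print(f"Usage-based pricing is cheaper below {threshold:.0f} units/month")
print(f"At 10% monthly growth from 100 units, it becomes pricier in month {month}")
```

With these made-up numbers, a team starting at 100 units a month and growing 10% monthly crosses the breakeven point in month 17.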

I’m curious to see how these tools evolve in 2025 and to get user feedback after a few years of use and growing data volumes.

Cursor for Data Engineers

This is the elephant in the room when it comes to reducing the 70% mentioned above.

No, LLMs cannot write end-to-end pipelines at the moment…

But!

They excel at solving problems that require editing fewer than 20 lines of code within a narrow scope.

As data engineers, many of our daily tasks, especially maintenance, revolve around such problems.

I think that in 2025, we will see tools emerge (like a Cursor for Data Engineering) that constrain LLMs to your data platform's context, with the motto:

80% of data pipelines can be standardized, and LLMs can automate 10% of the rest.

Cursor, Windsurf, and others are still too generic.

We need an IDE that limits the LLM to our platform’s context while letting it retrieve information through predefined tasks like logs, SQL queries, AWS CLI, etc.
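Here is a rough sketch of that idea. The names are hypothetical, and this is not how Cursor, Windsurf, or any existing tool works: the LLM never executes arbitrary commands; it can only emit requests for a small allowlist of platform tools, and each tool enforces its own guardrails, such as read-only SQL. An AWS CLI tool would be registered the same way, with its own restrictions.

```python
import re
from typing import Callable

# Hypothetical allowlist of platform tools the LLM is permitted to call.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(name: str) -> Callable[[Callable[..., str]], Callable[..., str]]:
    """Register a function as an allowed tool under `name`."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("read_logs")
def read_logs(pipeline: str, lines: int = 50) -> str:
    # Placeholder: a real tool would fetch logs from your orchestrator.
    return f"last {lines} log lines of {pipeline}"


READ_ONLY_SQL = re.compile(r"^\s*(select|with|show|describe|explain)\b", re.IGNORECASE)


@tool("run_sql")
def run_sql(query: str) -> str:
    # Naive guard: one statement, starting with a read-only keyword.
    # A production version should parse the SQL instead of pattern-matching it.
    single_statement = ";" not in query.rstrip().rstrip(";")
    if not (single_statement and READ_ONLY_SQL.match(query)):
        raise PermissionError("only single read-only SQL statements are allowed")
    # Placeholder: a real tool would send the query to the warehouse.
    return f"would run: {query}"


def dispatch(request: dict) -> str:
    """Execute an LLM tool request shaped like {"tool": name, "args": {...}}."""
    name = request.get("tool")
    if name not in TOOLS:
        raise PermissionError(f"tool {name!r} is not allowed")
    return TOOLS[name](**request.get("args", {}))


result = dispatch({"tool": "run_sql", "args": {"query": "SELECT count(*) FROM orders"}})

blocked = []
for request in (
    {"tool": "shell", "args": {"command": "rm -rf /data"}},       # not allowlisted
    {"tool": "run_sql", "args": {"query": "DROP TABLE orders"}},  # not read-only
):
    try:
        dispatch(request)
    except PermissionError as err:
        blocked.append(str(err))
```

The design choice is to put the platform boundary in the dispatcher rather than in the prompt: whatever the model asks for, only registered, guarded tools can run.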

I read extensively on this topic over Christmas, so I’ll likely dedicate a post to this in the coming weeks.

Building a data stack is hard—too many moving pieces, too little time.

That’s where Boring Data comes in.

I’ve created a data stack onboarding package that combines ready-to-use templates with hands-on workshops, empowering your team to quickly and confidently build your stack.

Interested?

Check out boringdata.io or reply to this email—I’d be happy to walk you through our templates and workshops.

Thanks for reading

Ju

On that last point, check this out:

Cursor + dlt = works well

https://dlthub.com/blog/mooncoon

But if you want to add context about your platform, Anthropic's MCP (Model Context Protocol) might be the way to go.

I'd add montara.io to the platform-in-a-box category with user-based flat pricing.

Since these tools don't incur compute/storage costs, usage-based pricing does not make sense.