pip install data-stack

Ju Data Engineering Weekly - Ep 54

Seamless user experience is at the heart of the value proposition for many startups:

Airbnb: frictionless room booking

Uber: frictionless taxi booking

Amazon: frictionless online shopping

This is now reaching the data industry.

Developer onboarding is becoming a key differentiator: data tools in 2024 are open-source and pip-installable.

In this blog post, I focus on exploring examples of such tools across various segments: ELTs, orchestrators, SQL engines, and BI.

All these tools follow a similar pattern: they prioritize the dev experience at the cost of limiting the range of use cases they support.

The Pip install data stack

In this image, I tried to assemble a list of pip-installable tools:

Ingestion

Airbyte and Meltano are well-known open-source tools in this area.

They offer significant capabilities but can be challenging to start using, especially if you're self-hosting or creating custom connectors.

A new wave of ELTs packaged as libraries is emerging with tools such as dlt and CloudQuery.

Even Airbyte has launched its own initiative with PyAirbyte, which lets you load and run some Airbyte connectors from a Python library.

These tools position themselves between no-code ELTs and code-heavy approaches.

They are less powerful than their counterparts (fewer connectors, no dbt triggers, and no scheduler), but they are much lighter and easier to work with.

The competitive advantage of ELTs often lies in the variety of the connectors they offer.

However, no tool can cover every possible connection.

Lightweight tools have an advantage in this regard as they are easily interoperable.

This flexibility allows you to switch tools effortlessly if one isn't ideal for a specific data source: just add a line to your requirements.txt.

SQL Engines

The "pip wave" is also extending to the database segment with engines like DuckDB and CometDB.

This distribution channel makes them embeddable anywhere: in a notebook, standalone script, and even in the browser.

There's no need to manage a separate server for your database; everything runs where your code runs.
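To make "everything runs where your code runs" concrete, here is a minimal sketch of an in-process SQL query. It uses `sqlite3` from the Python standard library purely as a stand-in so that it runs without installing anything; with DuckDB the shape is similar (`duckdb.connect()` followed by `execute(...)`). The `orders` data and the `top_customers` helper are made up for illustration.

```python
import sqlite3  # stdlib stand-in for an embedded engine such as DuckDB


def top_customers(rows, limit=2):
    """Aggregate order amounts per customer inside the current process."""
    con = sqlite3.connect(":memory:")  # no server, no network, no daemon
    try:
        con.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
        con.executemany("INSERT INTO orders VALUES (?, ?)", rows)
        query = """
            SELECT customer, SUM(amount) AS total
            FROM orders
            GROUP BY customer
            ORDER BY total DESC, customer
            LIMIT ?
        """
        return con.execute(query, (limit,)).fetchall()
    finally:
        con.close()


# Hypothetical sample data: (customer, amount)
orders = [("alice", 30.0), ("bob", 12.5), ("alice", 20.0), ("carol", 40.0)]
result = top_customers(orders)
print(result)
```

The database lives and dies with the Python process, which is what makes this kind of engine easy to drop into a notebook or a script.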

Here as well, lightness and simplicity take precedence over scale: there's no server to manage, many integrations are available, and the engine interoperates seamlessly with other frameworks.

These engines are single-node, so they are limited by the size of the instance they are running on. They won’t scale like their distributed counterparts: they are simply made for use cases where infinite scalability is not needed.

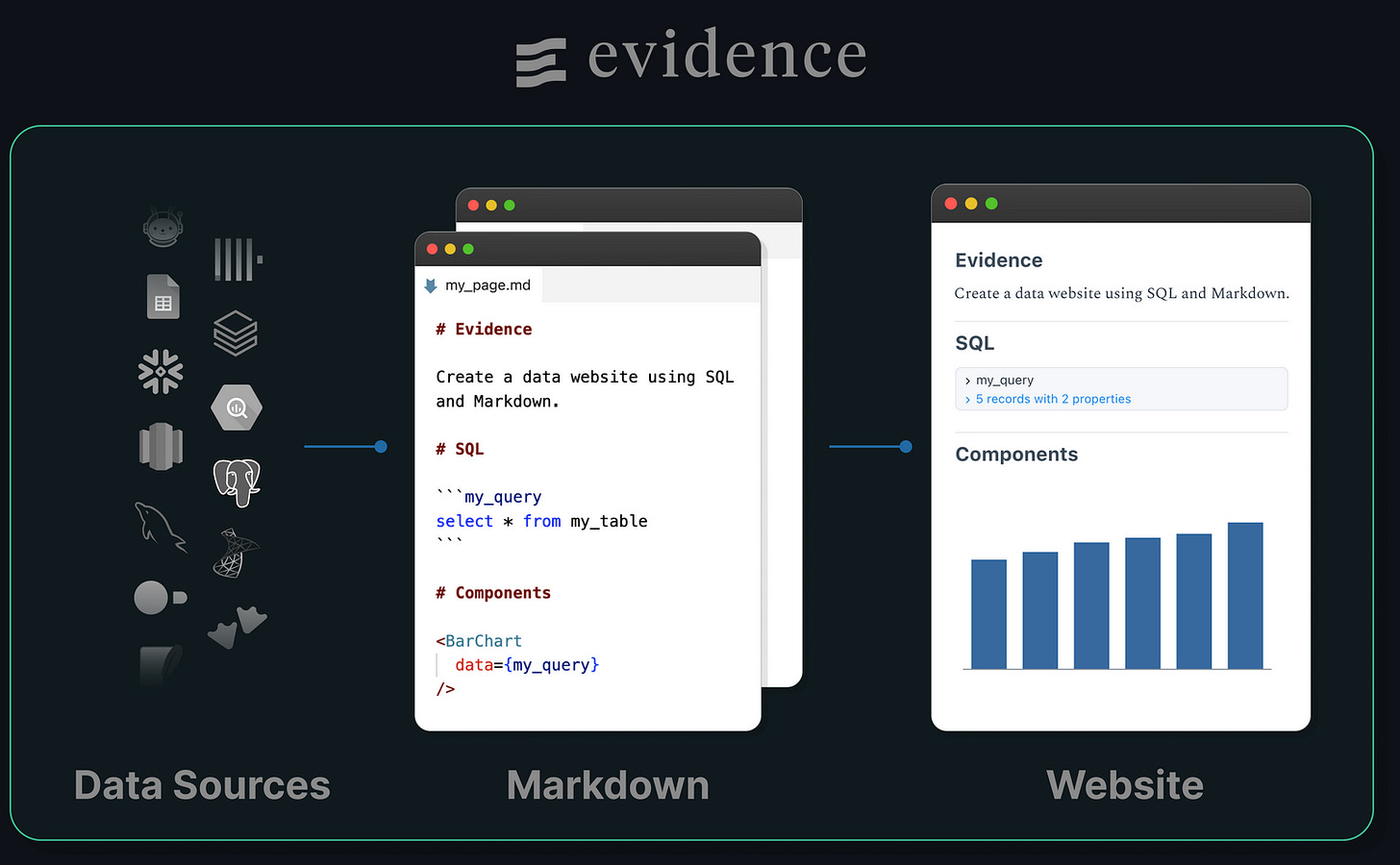

BI: BI as code

Traditional BI tools like Tableau can be cumbersome: multiple client interfaces (cloud/desktop), non-reproducible drag-and-drop dashboards, and lack of a test environment.

Newer tools are emerging with a different philosophy: npm-installable and “as code”.

As with any “as-code” approach, this allows easy version management, testing in dev environments, and automatic deployment via CI.

Additionally, they generate simple static web pages that you can self-host super easily (on S3?).

Here as well, the trade-off is clear: simplicity and lightness vs BI war machine.

Orchestration: run SQL transformations

dbt is the clear leader in this category.

While powerful, it can get a bit heavy to set up and maintain.

A new wave of even lighter tools is emerging.

SQLMesh, Lea, and Yato are three such tools. They leverage SQLGlot's SQL parsing capabilities to remove the need to manually write {{ ref() }} between models.

You simply provide them with a folder full of SQL models, and they automatically figure out the execution DAG.
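As a rough illustration of how an execution DAG can be inferred from SQL alone, here is a minimal sketch. It is not how any of these tools is implemented: they rely on SQLGlot's parser, whereas this version uses a naive regular expression so that it runs with the standard library only. The model names and queries are hypothetical.

```python
import re
from graphlib import TopologicalSorter

# Hypothetical models: in practice each entry would be a .sql file in a folder.
MODELS = {
    "daily_revenue": "SELECT day, SUM(amount) AS revenue FROM clean_orders GROUP BY day",
    "clean_orders": "SELECT * FROM raw_orders WHERE amount > 0",
    "top_days": "SELECT day FROM daily_revenue ORDER BY revenue DESC LIMIT 10",
}

# Naive pattern: the identifier that follows FROM or JOIN. A real parser also
# handles CTEs, subqueries, quoting, and dialects, which this regex does not.
TABLE_REF = re.compile(r"\b(?:FROM|JOIN)\s+([A-Za-z_][A-Za-z0-9_]*)", re.IGNORECASE)


def execution_order(models):
    """Return model names so that every model comes after its dependencies."""
    graph = {}
    for name, sql in models.items():
        referenced = set(TABLE_REF.findall(sql))
        # Keep only references to other models; anything else is a source table.
        graph[name] = {ref for ref in referenced if ref in models and ref != name}
    return list(TopologicalSorter(graph).static_order())


order = execution_order(MODELS)
print(order)
```

Because the dependencies are read from the queries themselves, nothing like {{ ref() }} has to be written by hand; `raw_orders` is treated as an external source since no model defines it.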

Yato, for instance, loads a DuckDB backup file from S3, retrieves the SQL graph from SQLGlot, executes the queries in DuckDB, and backs the database up to S3 again.

The scope of Yato is deliberately limited but sufficient for running many small data applications.

Instead of configuring a full dbt project for a simple data app, write SQL queries in a file, run your .py file, and the data is transformed and ready to be served.

Simple. Efficient.

What makes this popular?

Easy onboarding

While Docker is an excellent distribution channel, containers are still seen as a source of complexity by many.

In the end, data tools are like any other product: they should enable users to get started quickly and easily.

Those first moments using a tool, similar to a user's first impression of a webpage, heavily influence the developer's opinion of it.

Similar to Amazon's 1-click purchase, we need more: “Run now with 1 pip-install”.

Lightweight: Single node stack

The limitation of this data stack is of course scale.

However, I think it can help a lot of teams to build really simple data products.

Nowadays, you can rent large VMs for minimal cost, making these solutions viable for scales that are likely adequate for many use cases.

After all, in many business scenarios, you rarely need to query more than 10 GB at once to run your dashboard or product.

Cloud managed services

What's great about pip-installable tools is how easily they integrate everywhere, particularly with the managed services of cloud providers.

Snowflake initiated this trend by building their cloud warehouse on top of S3.

The “pip install wave” continues to push in that direction: tools focus on their unique code contributions while leaving the peripheral features to managed services maintained by hundreds of engineers at AWS.

Let’s take the ELT example.

Instead of setting up Airbyte on a Kubernetes cluster, you can run dlt inside a Lambda function:

Logging and observability are handled by CloudWatch

Advanced retry strategies by Step Functions

State management by DynamoDB

Just as Snowflake leverages S3's 99.99% data availability and 99.999999999% durability, data tool builders can leverage all of these features without needing to rebuild them, essentially getting them "for free".
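To show how little glue code this division of labor requires, here is a minimal sketch of a Lambda-style handler. Everything in it is hypothetical: `InMemoryState` stands in for a DynamoDB table, `fetch_since` for a real source, and `load` for the ingestion library call. The sketch only illustrates the shape: log with the standard `logging` module (Lambda forwards function logs to CloudWatch), let exceptions propagate so an external retry policy such as Step Functions can act on them, and keep the cursor in an external store.

```python
import logging

logger = logging.getLogger("ingest")
logger.setLevel(logging.INFO)


class InMemoryState:
    """Stand-in for a DynamoDB table holding one cursor per pipeline."""

    def __init__(self):
        self._items = {}

    def get(self, key, default=None):
        return self._items.get(key, default)

    def put(self, key, value):
        self._items[key] = value


def fetch_since(cursor):
    """Hypothetical source: returns records with an id greater than cursor."""
    data = [{"id": 1}, {"id": 2}, {"id": 3}]
    return [record for record in data if record["id"] > cursor]


def make_handler(state, fetch=fetch_since, load=lambda records: None):
    """Build a handler with the (event, context) signature Lambda expects."""

    def handler(event, context):
        pipeline = event.get("pipeline", "default")
        cursor = state.get(pipeline, 0)
        records = fetch(cursor)
        logger.info("pipeline=%s cursor=%s new_records=%d", pipeline, cursor, len(records))
        # No try/except here: a failure makes the invocation fail, and the
        # caller's retry policy decides what happens next.
        load(records)
        # The cursor only advances after a successful load.
        if records:
            state.put(pipeline, max(record["id"] for record in records))
        return {"loaded": len(records), "cursor": state.get(pipeline, cursor)}

    return handler


state = InMemoryState()
handler = make_handler(state)
first = handler({"pipeline": "orders"}, None)
second = handler({"pipeline": "orders"}, None)
print(first, second)
```

The handler itself contains no retry loop, no log shipping, and no storage layer; in this sketch those concerns are assumed to live in the managed services listed above.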

Thanks for reading,

-Ju

I would be grateful if you could help me improve this newsletter. Don’t hesitate to tell me what you liked or disliked and which topics you would like me to tackle.

P.S. You can reply to this email; it will get to me.

I like the graph of how cloud services replace Docker and other infrastructure. It clearly visualizes a fact: you don’t need a complex data stack to drive your business.

Love it - writing one about MDS as code myself.