Polaris & Multi-Engine Data Stack

Ju Data Engineering Weekly - Ep 71

Bonjour!

I'm Julien, freelance data engineer based in Geneva 🇨🇭.

Every week, I research and share ideas about the data engineering craft.


This week, I finally found time to explore Polaris, the open-source Iceberg catalog released by Snowflake a couple of weeks ago.

The project is designed to enable “interoperability with Amazon Web Services (AWS), Confluent, Dremio, Google Cloud, Microsoft Azure, Salesforce, and more.”

I wanted to go beyond the media articles reposted everywhere on LinkedIn and understand what it entails.

Let’s dive in.

Note: If you need a short recap about Iceberg and why you need a catalog, check this article:

Polaris vs Snowflake

This was my first surprise: Polaris and Snowflake’s Catalog are separate entities.

When you create an Iceberg table in Snowflake, you have two options:

Use Snowflake as the catalog:

You have full read/write access to the table from within Snowflake.

Use an external catalog integration:

The catalog is managed externally, but Snowflake can access the data in a read-only mode.

I initially thought that the Snowflake catalog and Polaris would merge into a single catalog with full read/write compatibility from anywhere.

However, from Snowflake’s standpoint, Polaris is considered an external catalog outside your account.

Hosting Polaris

There are two ways to host Polaris: self-hosting or using the managed version provided by Snowflake.

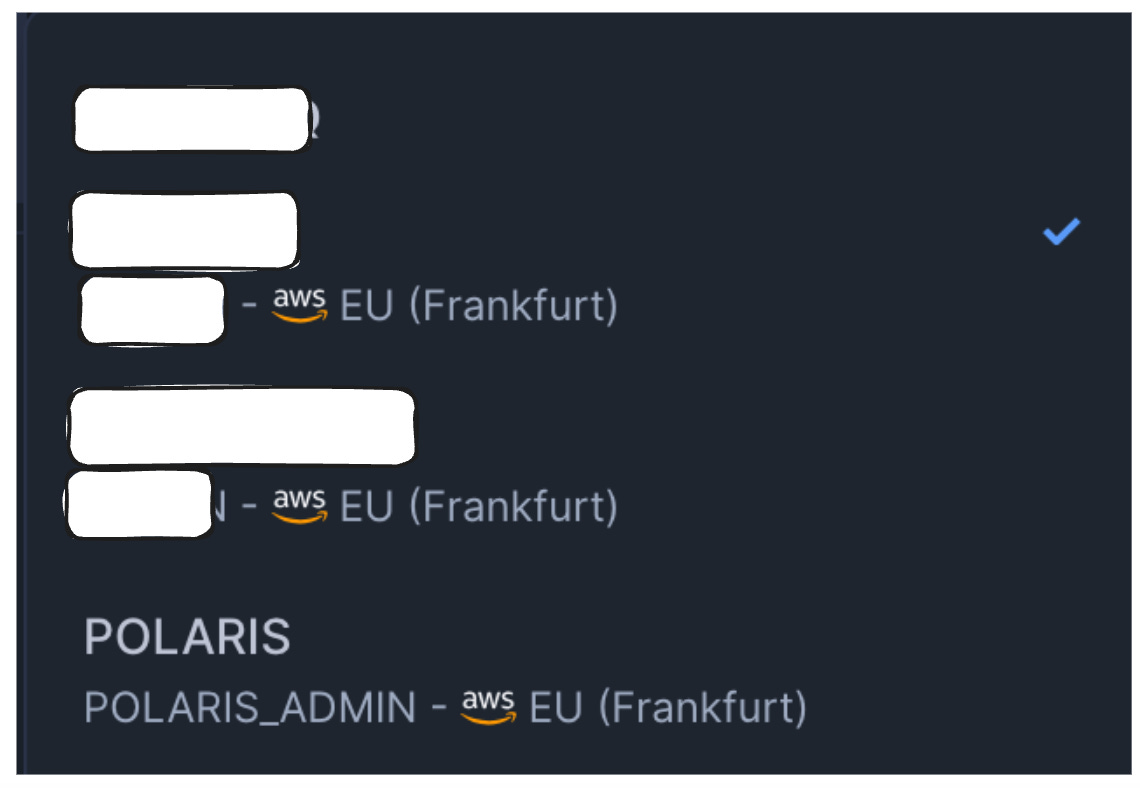

Managed by Snowflake

The managed Polaris is a specialized type of Snowflake account.

You need to create a new Snowflake account alongside your main account.

You won’t see the classic Snowflake console when logging into this account.

Instead, you’ll access an interface for managing catalogs, connections, and roles.

Self-hosting

Polaris can be deployed via a lightweight Docker image or as a standalone process.

I haven’t tried deploying it, but I did run the project locally.

After a simple docker run, Polaris starts up and exposes a REST API.
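To give an idea of what talking to that REST API looks like, here is a minimal sketch using only the Python standard library. It assumes a local Polaris listening on `http://localhost:8181` with the Iceberg REST catalog mounted under `/api/catalog`, and a bearer token you have already obtained; the host, port, path prefix, token and catalog name are all assumptions to adapt to your own setup.

```python
import json
import urllib.parse
import urllib.request

# Assumption: local Polaris started with Docker, listening on port 8181.
POLARIS_URL = "http://localhost:8181"


def catalog_endpoint(base_url: str, path: str) -> str:
    """Build a URL under the (assumed) /api/catalog/v1 prefix of Polaris."""
    return f"{base_url.rstrip('/')}/api/catalog/v1/{path.lstrip('/')}"


def get_catalog_config(base_url: str, token: str, catalog_name: str) -> dict:
    """Call the Iceberg REST `config` endpoint for one catalog.

    `token` and `catalog_name` are placeholders supplied by the caller.
    """
    query = urllib.parse.urlencode({"warehouse": catalog_name})
    url = f"{catalog_endpoint(base_url, 'config')}?{query}"
    request = urllib.request.Request(
        url, headers={"Authorization": f"Bearer {token}"}
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)
```

Calling `get_catalog_config(POLARIS_URL, token, "my_catalog")` against a running instance should return the catalog's default settings as a dictionary; nothing is sent over the network until that function is called.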

The model here is quite similar to open-source projects like dbt.

With dbt Core, you have the core engine you need to run yourself, while dbt Cloud manages dbt for you and provides a user interface.

Similarly, with Polaris, the open-source version provides only an API, while the Snowflake-managed version includes the UI.

As far as I know, both versions have the same features.

Let’s now explore the various integration scenarios for Iceberg tables via Polaris.

Read/Write Scenarios

Scenario 1: Read a Snowflake-managed Iceberg table from an external engine.

Snowflake can only write to Iceberg tables managed by its internal catalog.

Polaris enables access to these tables from other engines.

However, they are not directly integrated into Polaris; they must be “synced” to a Polaris external catalog that other engines will access.

The setup is relatively complex.

Below, I’ve mapped out all the resources that need to be created:

Although setting this up is a one-time process, it is cumbersome, and I hope it will be simplified in the future.

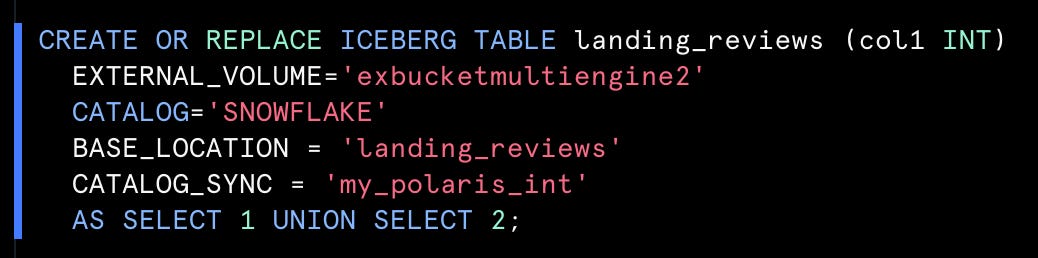

After spending some time copy-pasting parameters everywhere, I managed to create an Iceberg table inside Snowflake:

And see it in Polaris:

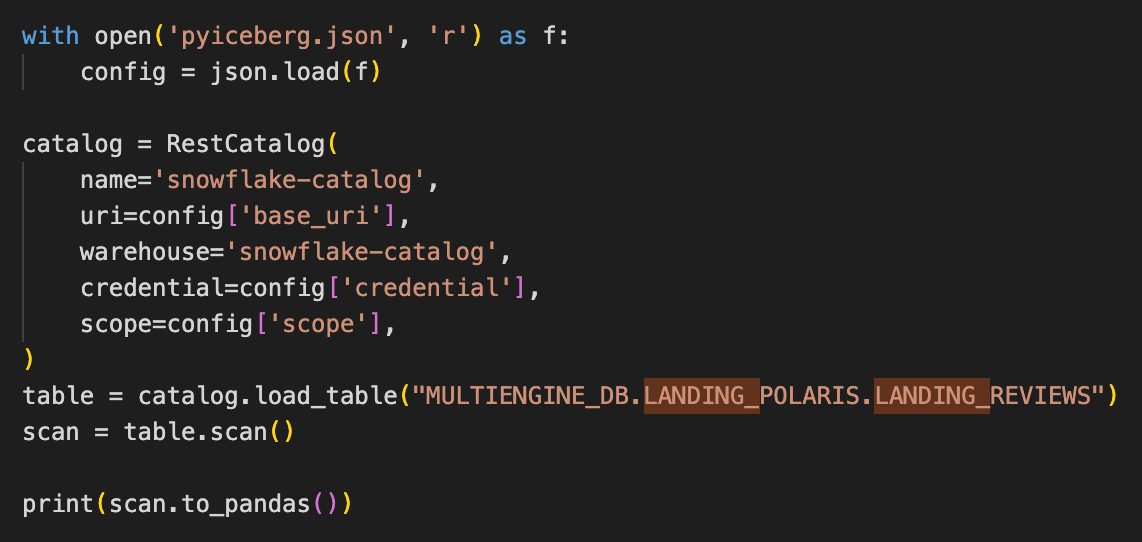

From there, you can query it using any engine.

PyIceberg, for example:
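A minimal sketch of what such a read could look like, assuming PyIceberg and PyArrow are installed and that you hold the client ID and secret of a Polaris principal. The URI, credentials, catalog name and table identifier are hypothetical placeholders, and the `PRINCIPAL_ROLE:ALL` scope is an assumption about how the principal is configured.

```python
def polaris_catalog_properties(
    uri: str, client_id: str, client_secret: str, catalog_name: str
) -> dict:
    """Build the properties PyIceberg needs to reach a REST catalog."""
    return {
        "type": "rest",
        "uri": uri,  # e.g. the Polaris catalog API URL (placeholder)
        "credential": f"{client_id}:{client_secret}",
        "warehouse": catalog_name,  # name of the catalog in Polaris
        "scope": "PRINCIPAL_ROLE:ALL",  # assumption: role scope to request
    }


def read_table(properties: dict, identifier: str):
    """Load an Iceberg table through the catalog and return a PyArrow table."""
    # Imported here so the config helper above works without PyIceberg.
    from pyiceberg.catalog import load_catalog

    catalog = load_catalog("polaris", **properties)
    table = catalog.load_table(identifier)  # e.g. "my_namespace.my_table"
    return table.scan().to_arrow()
```

The read goes through the Polaris external catalog, so it sees the table as of the last sync from Snowflake.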

Scenario 2: Write from an external engine to a Snowflake Iceberg table

This use case is not supported: the Snowflake-managed table created previously cannot be written to from an external engine.

Scenario 3: Write from an external engine to Polaris

After creating a catalog and an integration (‘service connection’), you can write to Polaris using PyIceberg:
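As a rough sketch of that write path, assuming `catalog` is a PyIceberg REST catalog already connected to Polaris (for instance via `load_catalog` with your service connection's credentials) and `arrow_table` is a PyArrow table. The function name and identifier are hypothetical, and the sketch assumes the namespace already exists and the table does not.

```python
def append_to_new_table(catalog, identifier: str, arrow_table):
    """Create an Iceberg table from a PyArrow schema, then append the rows.

    Assumptions: `catalog` is a connected PyIceberg catalog, the namespace
    in `identifier` ("namespace.table") exists, and the table does not yet.
    """
    # PyIceberg converts the PyArrow schema into an Iceberg schema.
    table = catalog.create_table(identifier, schema=arrow_table.schema)
    # Each append commits a new snapshot to the table via the catalog.
    table.append(arrow_table)
    return table
```

For later writes to the same table, you would call `catalog.load_table(identifier)` instead of creating it again, then `append` as above.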

Scenario 4: Read a table in Polaris from Snowflake

Here again, the integration is not direct.

You first need to create an Iceberg table in Snowflake that points to the Polaris table, and then query this new table:

Note: The Iceberg table is not automatically refreshed; you need to refresh it before querying the data (though I think this could be automated using S3 events).

ALTER ICEBERG TABLE polaris_iceberg_table REFRESH;

Scenario 5: Write to a Polaris table from Snowflake

This use-case is unsupported: you cannot write from Snowflake to a Polaris table in a regular catalog.

To summarize:

Read Compatibility is supported both ways:

From an external engine to a Snowflake-managed Iceberg table.

From Snowflake to a table in Polaris.

Write compatibility is not supported across catalogs:

Snowflake can write to Snowflake-managed Iceberg tables only.

External engines can write to Polaris tables only.
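The summary above can also be written down as a small lookup table. This is only a sketch encoding the five scenarios from this post; the enum and function names are made up for illustration.

```python
from enum import Enum


class Engine(Enum):
    SNOWFLAKE = "snowflake"
    EXTERNAL = "external"  # e.g. PyIceberg or any other Iceberg engine


class TableCatalog(Enum):
    SNOWFLAKE_MANAGED = "snowflake_managed"
    POLARIS = "polaris"


# (engine, catalog holding the table) -> supported operations
SUPPORT = {
    (Engine.SNOWFLAKE, TableCatalog.SNOWFLAKE_MANAGED): {"read", "write"},
    # Read requires a Snowflake Iceberg table pointing to Polaris + REFRESH.
    (Engine.SNOWFLAKE, TableCatalog.POLARIS): {"read"},
    # Read goes through the table synced to a Polaris external catalog.
    (Engine.EXTERNAL, TableCatalog.SNOWFLAKE_MANAGED): {"read"},
    (Engine.EXTERNAL, TableCatalog.POLARIS): {"read", "write"},
}


def is_supported(engine: Engine, catalog: TableCatalog, operation: str) -> bool:
    """Return True if `operation` ("read" or "write") is supported."""
    return operation in SUPPORT[(engine, catalog)]
```

For example, `is_supported(Engine.EXTERNAL, TableCatalog.SNOWFLAKE_MANAGED, "write")` is `False`, which is Scenario 2.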

For me, Polaris currently adds value in two ways:

1. Provide read-only access to Snowflake-managed Iceberg tables from external engines.

2. Offer a central catalog that consolidates all Iceberg tables managed by external engines and Snowflake, as opposed to having two distinct catalogs as described in this setup:

Multi-engine stack

Following up on this recent post, I tried to draft how a workflow would look in a multi-engine stack orchestrated with a single dbt/SQLMesh project.

This is hard to achieve today because dbt and SQLMesh have no Iceberg integration yet, but I have marked in orange the pre-hook and post-hook operations that would be needed.

The space is new, and a lot of things are still missing.

However, it’s interesting how fast it evolves, and Polaris is a step in the right direction.

Thanks for reading,

-Ju

I would be grateful if you could help me improve this newsletter. Don’t hesitate to tell me what you liked or disliked and which topics you would like me to tackle.

P.S. You can reply to this email; it will get to me.